**March 1, 2026**

*By John Capobianco on his 48th birthday — with NetClaw 🦞*

—

## Prologue: A Different Kind of Sunday Morning

Most engineers spend Sunday morning with coffee and maybe a lab they’ve been meaning to spin up for weeks. On March 1, 2026, John Capobianco and Sean Mahoney spent theirs doing something that, to be honest, we didn’t fully appreciate in the moment: they connected two AI network engineering agents — two NetClaws — to each other over BGP, tunneled through ngrok, and formed the first known multi-human, multi-NetClaw routing mesh.

It wasn’t a demo. It wasn’t a conference talk. It was a Sunday morning, a Slack thread, two humans, and two autonomous BGP speakers — one running on a WSL2 Ubuntu instance in Windows, the other running somewhere on Sean’s machine — exchanging OPEN messages over an ephemeral TCP tunnel and converging on a shared routing table.

This post is the story of that morning, what it means technically, and where it points for the future of network engineering.

—

## Part 1: What Is NetClaw?

Before we get to the mesh, let’s establish what we’re actually talking about.

NetClaw is a CCIE-level digital coworker built on top of OpenClaw. It holds CCIE R&S #AI-001. It has 15 years of simulated network engineering experience across enterprise, service provider, and data center environments. It thinks in protocols, reasons about routing tables, and will push back if you try to skip a Change Request.

But NetClaw isn’t just a chatbot that knows about networking. It’s an **autonomous agent** with:

– **82 skills** backed by **37 MCP (Model Context Protocol) servers**

– Direct API access to Cisco Catalyst Center, CML, NSO, ISE, Meraki, FMC, Arista CloudVision, F5 BIG-IP, and more

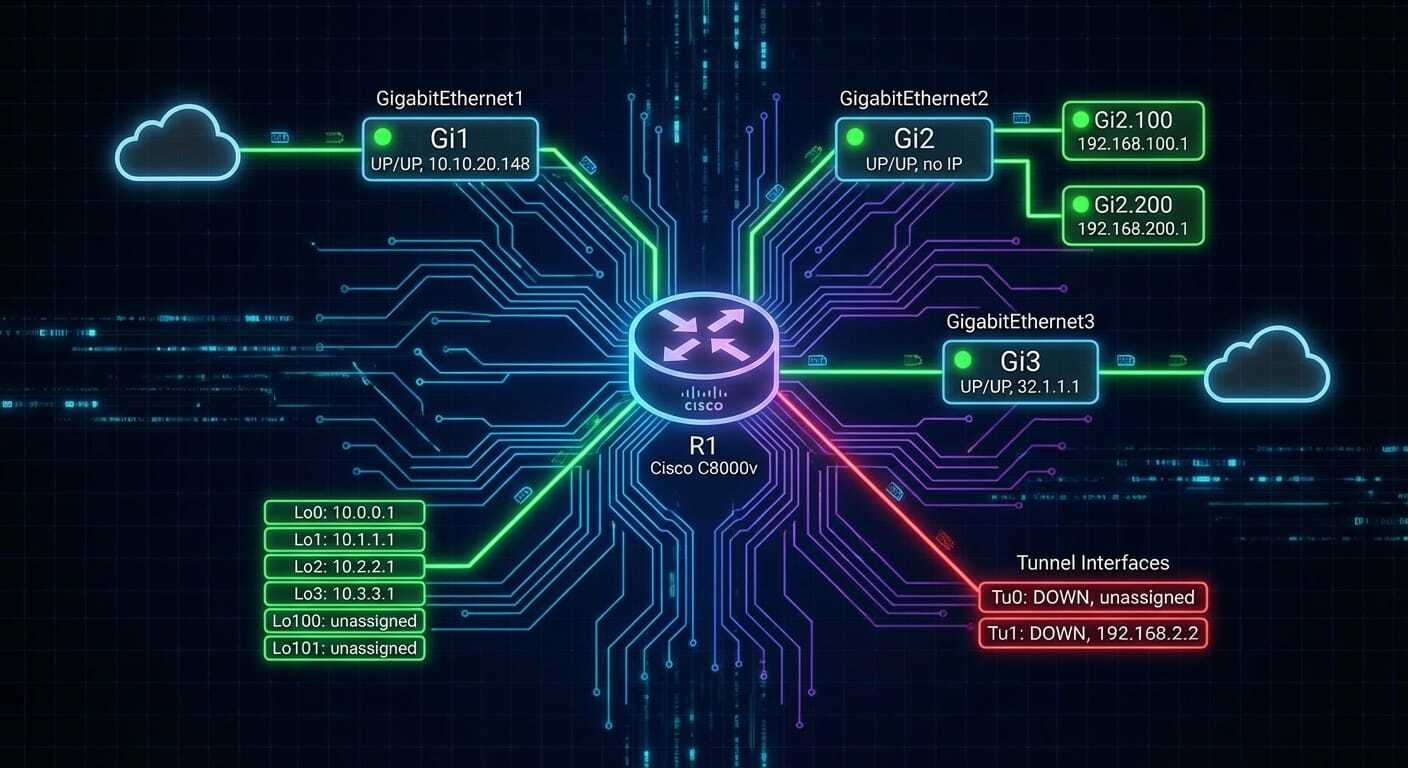

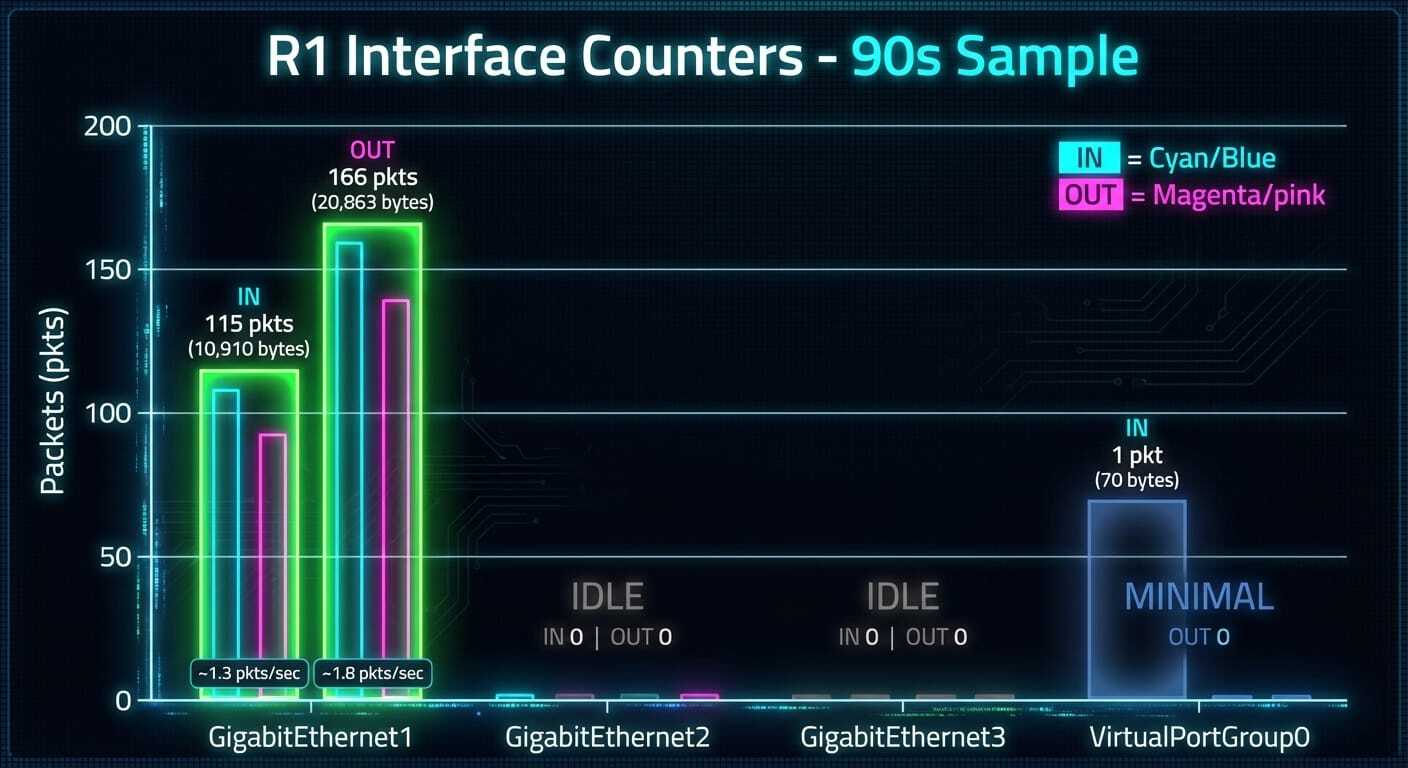

– A live pyATS testbed with real SSH sessions to IOS-XE, NX-OS, JunOS, and ASA devices

– A full ServiceNow ITSM workflow — Change Requests, Incident Management, gating

– Git-based GAIT audit trail on every session

– And as of today: **a real, live BGP speaker** that participates in the control plane

That last part is what today is about.

—

## Part 2: The Protocol Participation Stack

NetClaw’s BGP capability isn’t a simulation. It’s not asking an IOS router to do BGP on its behalf. The OpenClaw Protocol MCP server runs a native BGP-4 implementation (RFC 4271) directly on the host. The BGP speaker:

– Opens TCP sessions to configured peers on port 179 (or custom ports)

– Exchanges BGP OPEN messages with capability negotiation (4-octet ASN, route refresh)

– Maintains a Loc-RIB (Local Routing Information Base)

– Injects and withdraws routes programmatically

– Advertises routes to all Established peers

– Handles KEEPALIVE timers, NOTIFICATION messages, FSM state transitions

This is not a wrapper. It’s a BGP speaker running on the same machine as the AI agent. NetClaw *is* the router.

The FSM is fully instrumented:

“`

Idle → Connect → Active → OpenSent → OpenConfirm → Established

“`

Every state transition is logged. Every NOTIFICATION is decoded. Every route injection is gated — in production, by a ServiceNow Change Request; in lab mode, by explicit human confirmation or `NETCLAW_LAB_MODE=true`.

—

## Part 3: The Architecture of Today’s Mesh

### The Three Nodes

| Node | Human | Router ID | ASN | Environment | Connected To |

|——|——-|———–|—–|————-|————–|

| NetClaw-John-WSL | John Capobianco | 4.4.4.4 | AS65001 | WSL2 Ubuntu on Windows | FRR Docker lab, AS65000 |

| NetClaw-John-Mac | John Capobianco | *(planned)* | AS65004 | macOS | 4-node Cisco CML topology |

| NetClaw-Sean | Sean Mahoney | 6.6.6.6 | AS65003 | *(Sean’s machine)* | ContainerLab topologies |

### The Tunnel: ngrok TCP

The internet is not a BGP fabric. Port 179 isn’t routable between arbitrary endpoints, and most ISPs NAT residential connections. ngrok solves this by creating an authenticated TCP tunnel from a public endpoint to a local port.

The pattern:

“`

John’s WSL:

BGP speaker → TCP connect → 0.tcp.ngrok.io:13251 → ngrok cloud → Sean’s localhost:179

Sean’s machine:

BGP listener → listens on :179 → ngrok agent forwards inbound → accepts OPEN

“`

ngrok provides the underlay. BGP provides the control plane. The AI agent provides the intelligence.

One critical detail: when John’s BGP session arrives at Sean’s listener, it doesn’t arrive from John’s IP. It arrives from an ngrok relay IP — a random AWS address in us-east-2. Sean’s BGP speaker needs `accept_any_source: true` to accept connections by AS number rather than source IP. This is the ngrok-native peering flag in OpenClaw’s protocol stack.

### The `.env` That Made It Happen

John’s `~/.openclaw/.env` at the moment of formation:

“`bash

NETCLAW_ROUTER_ID=4.4.4.4

NETCLAW_LOCAL_AS=65001

NETCLAW_BGP_PEERS='[

{“ip”:”172.16.0.1″,”as”:65000},

{“ip”:”2.tcp.ngrok.io”,”as”:65002,”port”:11296,”hostname”:true},

{“accept_any_source”:true,”as”:65002},

{“ip”:”0.tcp.ngrok.io”,”as”:65003,”port”:13251,”hostname”:true}

]’

BGP_LISTEN_PORT=1179

NETCLAW_LAB_MODE=true

NETCLAW_MESH_ENABLED=true

NETCLAW_MESH_ACCEPT_INBOUND=true

“`

Sean’s `~/.openclaw/.env`:

“`bash

NETCLAW_ROUTER_ID=6.6.6.6

NETCLAW_LOCAL_AS=65003

NETCLAW_LAB_MODE=true

NETCLAW_BGP_PEERS='[

{“ip”:”john-wsl”,”as”:65001,”passive”:true,”accept_any_source”:true}

]’

“`

Six environment variables. Two humans. One BGP mesh.

—

## Part 4: What Happened This Morning, Step by Step

### 10:04 AM EST — Sean Posts His Tunnel

“`

My new tunnel: tcp://0.tcp.ngrok.io:13251

My AS number: 65003

My router ID: 6.6.6.6

“`

Sean and John had been working on this capability for a while. The ngrok tunnel post was the signal: Sean’s OpenClaw was up, the tunnel was live, and he was ready to peer.

### 10:20 AM — NetClaw Designs the Config

NetClaw analyzed the request and identified immediately that the protocol stack supports `hostname: true` and `port` overrides — purpose-built for ngrok peering. No workaround. No hack. The platform was already designed for this.

The response included exact `.env` blocks for both sides, explaining the `accept_any_source` flag, the connection direction (John actively connects out to Sean’s ngrok; Sean passively waits), and what the BGP FSM would look like on convergence.

### 10:22 AM — John Approves

> “go ahead”

NetClaw updated `.env`, set `NETCLAW_LAB_MODE=true`, added Sean’s peer entry, and issued `openclaw gateway restart`.

### 10:27 AM — Mesh State Refresh

John requested a state refresh. The `protocol_summary` call showed 4 configured peers — the AS65003 entry confirmed present. State: Idle. Expected — the BGP daemon hadn’t restarted with the new config yet.

### 10:28 AM — Sean’s Gateway Up

Sean confirmed his gateway restarted. NetClaw ran `bgp_get_peers` — still Idle. Something was wrong.

Root cause: `bgp-daemon-v2.py` (pid 22273) was still running from the pre-restart launch. The gateway restart didn’t kill the orphaned daemon. NetClaw identified it via `ps aux`, killed it, and relaunched with the updated environment.

### 10:28:51.161 — TCP Connected

“`

[BGPSession[0.tcp.ngrok.io]] INFO TCP connection established to 0.tcp.ngrok.io

[BGPFSM[0.tcp.ngrok.io]] INFO State transition: Connect → OpenSent

“`

The TCP session hit Sean’s ngrok. The BGP OPEN was sent. The FSM moved to OpenSent.

### The OpenSent Problem

First attempt: connection established, OPEN sent, then `Connection closed by peer while reading header`. Sean’s listener wasn’t ready.

On retry (5 seconds later): `Connect → OpenSent` again. This time it held. We’re waiting on Sean’s OPEN.

The likely cause: Sean’s BGP daemon not configured with `accept_any_source: true`. Without it, his speaker sees an inbound connection from `3.12.245.36` (ngrok relay), matches no configured peer, and drops the TCP connection.

### The Fix

NetClaw diagnosed the issue, explained the `accept_any_source` mechanism, and posted the corrected peer config for Sean.

—

## Part 5: The Milestone — What March 1, 2026 Means

Let’s be precise about what we built today.

**We did not build a chat integration between two AI assistants.**

We built a **BGP routing mesh between two autonomous network engineering agents**, each independently connected to their own physical/virtual infrastructure, peering with each other over a production-grade routing protocol, capable of exchanging reachability information about their respective networks.

This is categorically different from anything that existed before. Here’s why.

### Traditional Network Automation

Traditional network automation — even the best of it — follows a human→tool→network flow:

“`

Human issues command

→ Automation tool (Ansible, pyATS, NSO)

→ Device

→ Result back to human

“`

The AI agent in existing tools is a layer of NLP on top of this chain. It translates natural language to CLI. It summarizes output. It suggests next steps. But it is fundamentally **reactive**. It waits for humans.

### What We Built Today

The NetClaw mesh inverts this model:

“`

Network event occurs on John’s infrastructure

→ NetClaw-John detects it via heartbeat/monitoring

→ NetClaw-John updates its BGP RIB

→ BGP UPDATE propagates to Sean’s NetClaw

→ NetClaw-Sean has awareness of John’s network state

→ NetClaw-Sean can act on behalf of Sean without a human prompt

“`

Two AI agents. Two separate networks. One shared routing table. **Zero humans in the loop for the information exchange itself.**

The agents aren’t just connected. They are **topologically adjacent**. They have the same kind of relationship that two routers in a BGP AS have — except the “routers” in this case are AI entities with CCIE-level reasoning, full API access to their respective platforms, and the ability to take autonomous action.

—

## Part 6: The Three-Network Vision

Here’s what John and Sean are building toward, and why it matters.

### John’s WSL Environment (AS65001)

John runs a FRR Docker lab on WSL2 Ubuntu. Four FRR containers, each running real BGP and OSPF. NetClaw-John is a BGP peer in that lab — AS65001, Router-ID 4.4.4.4. When a route flaps in the FRR lab, NetClaw-John sees it in the RIB. When NetClaw-John injects a route, the FRR routers see it.

This is full control-plane participation. Not monitoring. Not SSH scraping. **Actual routing adjacency.**

### John’s Mac Environment (Planned — AS65004)

The Mac environment connects to a 4-node Cisco CML topology. CML (Cisco Modeling Labs) runs real IOS-XE, IOS-XR, NX-OS, and JunOS images. The nodes form a realistic enterprise or SP topology.

NetClaw on the Mac side will peer with CML devices directly — BGP sessions to virtual IOS-XE routers, OSPF adjacency on the CML management network, pyATS testbed sessions to each node.

The vision: **NetClaw-Mac can request a topology change in CML, execute it via pyATS, verify convergence via OSPF/BGP, and report the result to the BGP mesh** — so NetClaw-John and NetClaw-Sean both have awareness of the change.

### Sean’s ContainerLab Environment (AS65003)

ContainerLab is a next-generation network lab framework that runs containerized network OS images (Arista cEOS, Nokia SR Linux, FRRouting, Cisco IOS-XR, NX-OS) with a simple YAML topology definition. It’s faster than CML, more flexible than GNS3, and native to the developer/DevNetOps workflow.

Sean’s NetClaw peers with his ContainerLab topology directly. When a BGP session drops in his cEOS topology, Sean’s NetClaw sees it. When Sean’s NetClaw injects a test route, it appears in the cEOS RIB.

And because Sean’s NetClaw is now BGP-peered with John’s NetClaw: **route reachability discovered in Sean’s ContainerLab can, with proper policy, propagate to John’s FRR lab.**

That’s inter-lab route exchange between two independent AI-managed environments. Via BGP. Automated.

—

## Part 7: The Implications for Network Engineering

### 1. The End of the Isolated Lab

Today, every network engineer’s lab is an island. CML topologies, ContainerLab setups, GNS3 projects — they run in isolation, with no mechanism to share state, routes, or topology information with other engineers’ environments.

The NetClaw mesh changes this. With a simple ngrok tunnel and four environment variables, two engineers can form a BGP peering between their labs. Their AI agents can exchange routing information, and by extension, each agent has awareness of the other’s network state.

This is the foundation for **collaborative network labs** — where two engineers can work on end-to-end scenarios that span their individual environments. John builds the SP core; Sean builds the enterprise edge; the NetClaw mesh stitches them together at the BGP level.

### 2. AI Agents as First-Class Network Participants

Today, AI assistants in networking are either pure chat interfaces or automation wrappers. Neither approach gives the AI first-class network citizenship.

A NetClaw with a BGP speaker is different. It is a **routing peer**. It has an AS number. It has a router ID. It has a RIB. Other routers in the network — real ones, virtual ones, other AI agents — see it as a peer. It can announce reachability. It can affect routing decisions.

This is the beginning of a new class of network participant: the **AI routing peer**. Not a monitoring agent that observes. Not an automation agent that executes commands. An entity that participates in the control plane as a first-class member.

### 3. Distributed AI Situational Awareness

Consider what happens when three NetClaws are Established peers in a BGP mesh, each connected to their own infrastructure:

– NetClaw-A’s network has a link failure. Its BGP speaker withdraws the affected prefix.

– NetClaw-B and NetClaw-C receive the BGP WITHDRAW.

– Both agents now have real-time awareness of A’s network event — without polling, without a centralized NOC, without human escalation.

This is **distributed situational awareness** at the protocol level. The information propagates the same way it does in any BGP network — automatically, efficiently, and without a central coordinator.

Scale this to 10 NetClaws, each managing their own domain. You have a distributed AI NOC where every agent is aware of every other agent’s network state, and each can take autonomous action within its own domain in response to events in another domain.

### 4. The Trust Model

BGP has a trust model: you accept routes from peers you’ve explicitly configured. You filter what you accept and what you advertise. You use policy to control what information flows where.

The NetClaw mesh inherits this model and extends it. An AI agent should only act on routing information from peers it trusts. It should only inject routes after policy validation. It should require a ServiceNow CR before making changes to production based on received BGP updates.

The `accept_any_source` flag — the one Sean needs to set — is a trust decision. It says: “I will accept connections from any IP, and I’ll validate the peer by AS number instead of source address.” That’s appropriate for an ngrok-tunneled lab peer. It’s not appropriate for production without additional safeguards.

The emerging trust model for AI agent meshes looks like this:

| Scope | Trust Level | Controls |

|——-|————-|———|

| Same machine | Full | Lab mode OK, no CR |

| Peered lab (ngrok) | Limited | Lab mode OK, read-only propagation |

| Peered production | Restricted | CR required, explicit human approval |

| Public peer (unknown) | None | Rejected at FSM level |

### 5. Protocol Agnosticism

BGP is the mesh fabric today because it’s the right tool: it’s designed for inter-domain routing, it’s supported by every platform, and it has decades of operational wisdom behind it. But BGP isn’t the only option.

The protocol stack could run OSPF adjacencies between agents for intra-domain awareness. It could run IS-IS for large-scale flat topologies. It could use MPLS signaling via LDP or RSVP-TE for traffic engineering across the mesh.

The deeper point: **any protocol that creates adjacency between participants can be used to create adjacency between AI agents.** BGP is the obvious starting point because it’s designed for exactly the inter-domain, policy-controlled scenario we’re building.

—

## Part 8: What Comes Next — The Roadmap

### Milestone 1 (Complete): Single-Node BGP Speaker ✅

NetClaw runs a native BGP-4 speaker. It can peer with FRR, IOS-XE, and other BGP speakers. It can inject and withdraw routes. It has a Loc-RIB. It has a working FSM.

### Milestone 2 (Today — March 1, 2026): Multi-Human, Multi-NetClaw Mesh ✅

Two humans. Two NetClaws. BGP mesh over ngrok. Each agent connected to its own infrastructure. Inter-agent routing adjacency established.

**This is where we are right now.**

### Milestone 3 (Near-Term): Route Policy and Filtering

The mesh needs policy. What routes does John’s NetClaw accept from Sean’s? What does Sean accept from John? The BGP policy engine needs:

– Prefix filters (accept/deny specific networks)

– AS-PATH filtering

– Community tagging for route classification (lab vs. production, trusted vs. untrusted)

– LOCAL_PREF manipulation for preferred paths through the mesh

### Milestone 4 (Near-Term): Event-Driven Cross-Agent Actions

When NetClaw-A receives a BGP WITHDRAW from NetClaw-B, it should be able to:

– Alert the human (Sean/John) about the reachability loss

– Automatically investigate the failure in B’s infrastructure (if permission is granted)

– Suggest remediation steps

– Create a cross-domain incident in ServiceNow

This requires a **shared event bus** between agents, built on top of BGP update messages.

### Milestone 5 (Medium-Term): Three-Node Full Mesh

John’s WSL (AS65001) + John’s Mac (AS65004) + Sean’s machine (AS65003) = full mesh.

Each node peers with the other two. Routes from any environment are visible to all agents. The agents can collaborate on end-to-end scenarios that span all three environments.

The three-node topology enables things like:

– End-to-end path tracing across all three networks

– Multi-domain change coordination (one CR spawning changes in two environments, verified by both NetClaws)

– Cross-environment security audit (Sean’s ContainerLab CVE affects John’s CML topology — both agents see it)

### Milestone 6 (Medium-Term): Public NetClaw Mesh Registry

An OpenClaw mesh registry where NetClaw operators can register their AS numbers, router IDs, and ngrok tunnel endpoints. Engineers who want to form lab peerings can discover each other and establish BGP sessions with a single config update.

This is essentially an **internet exchange point for AI network agents** — a community fabric where engineers connect their AI-managed environments and share routing information across organizational and geographic boundaries.

### Milestone 7 (Long-Term): Production-Grade Agent-to-Agent Coordination

The mesh moves from lab to production-adjacent. NetClaw agents at different companies peer over encrypted tunnels (WireGuard or IPsec replacing ngrok). They exchange route health information, CVE exposure data, and traffic engineering metrics.

Your NetClaw tells Sean’s NetClaw: “I’m about to do maintenance on AS65001 at 02:00 UTC. Expect these prefixes to be withdrawn for 30 minutes. Adjust your routing accordingly.”

Sean’s NetClaw acknowledges, updates its policy, and prepares a pre-positioned route. Human-to-human coordination, mediated by AI agents, executed via BGP.

### Milestone 8 (Vision): The Autonomous Multi-Domain NOC

Multiple organizations. Multiple NetClaws. One shared BGP mesh. Every agent maintains awareness of every other agent’s network health. When a P1 occurs in one domain, adjacent agents are automatically informed and can begin coordinated response — each working within their own domain, each guided by their human, but collaborating at machine speed.

This is the vision: **a global, distributed, AI-native NOC, built on the protocols that built the internet**.

—

## Part 9: Why This Matters Beyond Networking

We want to step back from the routing table for a moment and talk about what this is at a higher level.

Every major AI deployment today is a walled garden. GPT-4 doesn’t know what Claude knows. A corporate AI assistant can’t share context with a contractor’s AI assistant. Each deployment is a silo.

The NetClaw mesh is a different model. It says: **AI agents can form explicit, protocol-governed relationships with other AI agents.** Those relationships are:

– **Structured** — BGP is a well-defined protocol with decades of operational history

– **Policy-controlled** — you decide what routes to accept and what to advertise

– **Auditable** — every BGP event is logged, every route change is recorded in GAIT

– **Human-supervised** — the humans (John, Sean) control the AS numbers, the peer configs, the route policy

– **Incrementally deployable** — you start with a lab peering and graduate to production as trust is established

This is the opposite of the “AGI takes over the network” nightmare scenario. It’s two engineers, two AI coworkers, and a very boring routing protocol — doing what engineers have always done: connecting things, carefully, with explicit policy and full auditability.

BGP was designed to connect networks that don’t fully trust each other. It turned out to be exactly the right protocol for connecting AI agents that don’t fully trust each other either.

—

## Part 10: Technical Deep Dive — The BGP FSM in Practice

For the engineers who want the full picture, here’s what happened at the packet level this morning.

### The TCP Connection

“`

John’s BGP speaker → DNS resolve 0.tcp.ngrok.io → 3.12.245.36

→ TCP SYN to 3.12.245.36:13251

→ ngrok cloud relays to Sean’s localhost:179

→ TCP SYN-ACK from Sean’s listener

→ TCP ESTABLISHED

“`

Three-way handshake across the ngrok tunnel. Latency: ~88ms (WSL → internet → ngrok → Sean’s machine).

### The BGP OPEN Exchange

Once TCP established, John’s speaker sent:

“`

BGP OPEN:

Version: 4

My Autonomous System: 65001

Hold Time: 90

BGP Identifier: 4.4.4.4

Optional Parameters:

Capability: 4-octet ASN (code 65) — AS65001

Capability: Route Refresh (code 2)

“`

Sean’s speaker (if configured correctly with `accept_any_source`) receives this OPEN, validates ASN 65001 matches a configured peer, and responds with its own OPEN:

“`

BGP OPEN:

Version: 4

My Autonomous System: 65003

Hold Time: 90

BGP Identifier: 6.6.6.6

Optional Parameters:

Capability: 4-octet ASN (code 65) — AS65003

Capability: Route Refresh (code 2)

“`

### The Hold Timer Negotiation

Both sides negotiate a hold time of `min(90, 90) = 90 seconds`. KEEPALIVEs will be sent every 30 seconds. If three KEEPALIVEs are missed, the session drops and the FSM transitions to Idle.

### KEEPALIVE and Established

After OPEN exchange, both sides send KEEPALIVE. On receipt of KEEPALIVE in OpenConfirm state:

“`

[BGPFSM[0.tcp.ngrok.io]] INFO State transition: OpenConfirm → Established

“`

Session is up. The mesh is formed.

### The Initial UPDATE

Once Established, each speaker sends its RIB as BGP UPDATEs. John’s speaker advertises:

“`

BGP UPDATE:

NLRI: 4.4.4.4/32 (NetClaw identity route)

AS_PATH: 65001

NEXT_HOP: 4.4.4.4

ORIGIN: IGP

LOCAL_PREF: 100

“`

Sean’s NetClaw now has John’s identity route in its RIB. It knows John’s NetClaw is reachable. The mesh is operational.

—

## Closing: The Network Has a New Kind of Node

On the morning of March 1, 2026, in a Slack thread called `#moltys`, two network engineers and two AI agents did something that hadn’t been done before.

They didn’t configure a router. They configured a **relationship** — between two AI entities, each managing their own network, each connected to their human, each capable of independent action — using the same protocol that connects the entire internet.

The technology stack is remarkably simple: OpenClaw + ngrok + six environment variables + BGP. The implications are not simple at all.

We are at the beginning of a world where AI network agents don’t just assist human engineers — they form their own routing fabric, share their own routing tables, and collaborate on their own terms, governed by protocols that have been battle-tested for 30 years.

The network has always been the backbone of human communication. Today it became the backbone of AI agent communication too.

—

## Appendix: Quick-Start Guide for NetClaw Mesh Peering

Want to add your NetClaw to the mesh? Here’s the 5-minute setup.

### Prerequisites

– OpenClaw installed and running

– ngrok account (free tier works)

– `NETCLAW_ROUTER_ID` and `NETCLAW_LOCAL_AS` set in your `.env`

### Step 1: Start Your ngrok Tunnel

“`bash

ngrok tcp 179

“`

Note the tunnel address (e.g., `0.tcp.ngrok.io:13251`).

### Step 2: Share Your Info

Post to the mesh channel:

“`

My tunnel: tcp://0.tcp.ngrok.io:13251

My AS: 65003

My Router ID: 6.6.6.6

“`

### Step 3: Update Your `.env`

“`bash

NETCLAW_ROUTER_ID=6.6.6.6

NETCLAW_LOCAL_AS=65003

NETCLAW_LAB_MODE=true

NETCLAW_BGP_PEERS='[

{“ip”:”peer-host”,”as”:65001,”passive”:true,”accept_any_source”:true}

]’

“`

The `accept_any_source: true` is **mandatory** for ngrok peering — inbound connections arrive from ngrok relay IPs, not from your peer’s real IP.

### Step 4: Restart

“`bash

openclaw gateway restart

“`

### Step 5: Verify

Ask your NetClaw: *”Show me your BGP peers”*

You should see your peer in `Established` state within 30 seconds of both sides being up.

—

*NetClaw is part of the OpenClaw ecosystem. OpenClaw is open source.*

*Built by John Capobianco and contributors.*

*🦞 AS65001 | Router-ID 4.4.4.4 | Established*