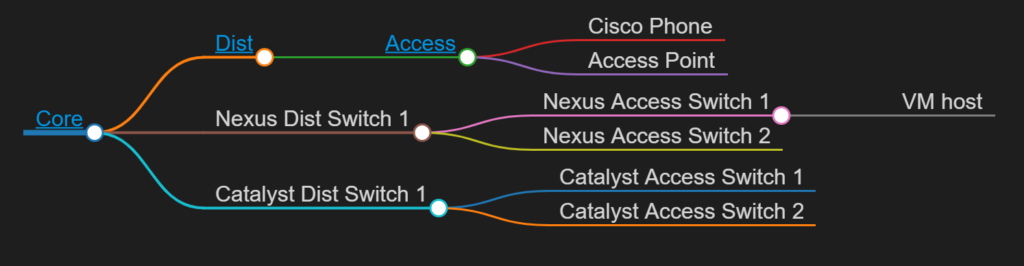

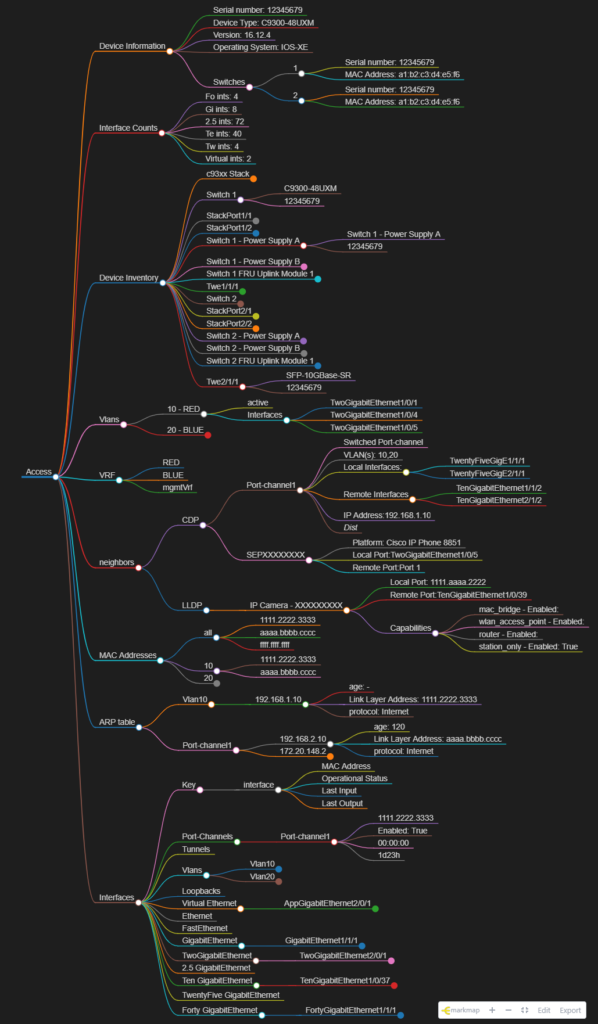

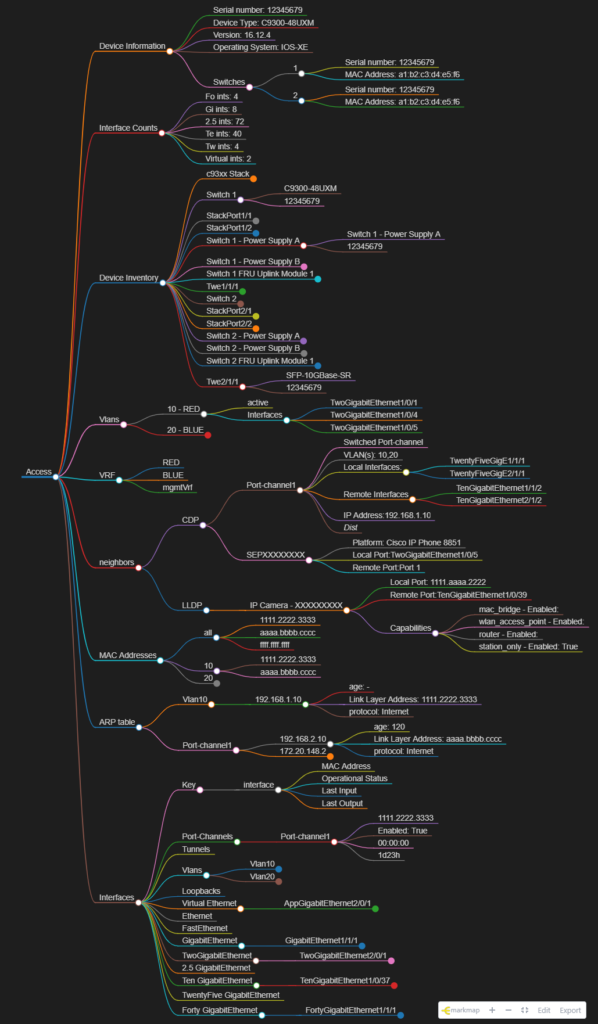

mind_nmap by automateyournetwork

An automated network Mind Map utility built from markmap.js.org
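markmap draws a Markdown outline as an interactive mind map, with each heading or nested list level becoming a branch. The sketch below shows one way to turn network data into such an outline. It assumes the data has already been collected into a nested Python dict. The function name `to_markmap` and the sample `facts` values are hypothetical and are not taken from mind_nmap, which may work quite differently.

```python
def to_markmap(title: str, tree: dict) -> str:
    """Render a nested dict as a markmap-friendly Markdown outline (hypothetical helper)."""
    lines = [f"# {title}"]  # the root heading becomes the centre of the mind map

    def walk(node: dict, depth: int) -> None:
        for key, value in node.items():
            indent = "  " * depth  # two spaces per level gives nested bullets
            if isinstance(value, dict):
                lines.append(f"{indent}- {key}")  # branch node
                walk(value, depth + 1)
            else:
                lines.append(f"{indent}- {key}: {value}")  # leaf node

    walk(tree, 0)
    return "\n".join(lines)


# Hypothetical device facts, for illustration only
facts = {
    "interfaces": {
        "Ethernet1/1": {"status": "up", "ip": "10.0.0.1"},
        "Ethernet1/2": {"status": "down", "ip": "unassigned"},
    },
    "version": "9.3(3)",
}

if __name__ == "__main__":
    print(to_markmap("sandbox-nxos", facts))
```

The printed Markdown can be pasted into the markmap editor at markmap.js.org or rendered to HTML with the markmap-cli package.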

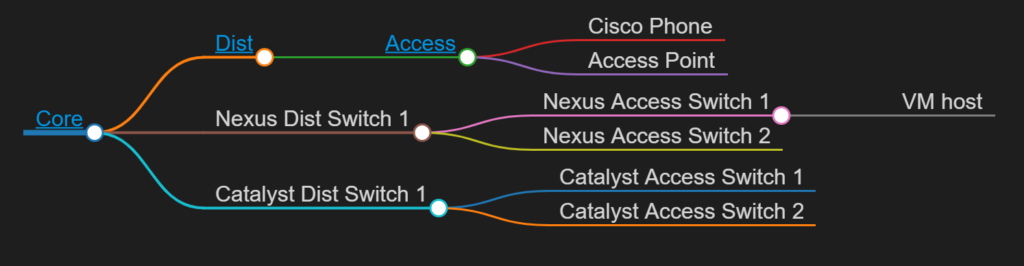

The modern approach to enterprise network management

This was the most fun I’ve ever had on a podcast – I hope you enjoy it as much as I did!

Thank you to Cisco DevNet and everybody in my community who has supported me over the years!

I won!

I am so very happy to announce that, after 8 months of development, Automate Your Network is now available on educative.io as an online course (early access)!

Automate Your Network – Learn Interactively (educative.io)

Check it out!

Infrastructure as Software – Applying A Software Design Pattern to Network Automation!

Applying A Software Design Pattern To Network Automation – Packet Pushers

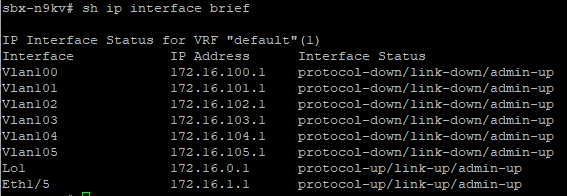

Remember this CLI output?

Using real network data, I’ve reimagined the Show IP Interface Brief command as an Interactive 3D World!

It comes complete with indicators that make it easy to see whether an interface is healthy or not!
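The post does not say how the health indicators are computed. A minimal sketch, assuming an interface counts as "healthy" when both its Status and Protocol columns read `up`, could parse the CLI output like this. The helper name `parse_brief` and the `SAMPLE` output are hypothetical and illustrative only. This is not the 3D world's actual implementation.

```python
# Hypothetical sample in the usual IOS "show ip interface brief" layout
SAMPLE = """Interface              IP-Address      OK? Method Status                Protocol
GigabitEthernet1       10.10.20.48     YES NVRAM  up                    up
GigabitEthernet2       unassigned      YES NVRAM  administratively down down
Loopback0              10.0.0.1        YES manual up                    up
"""


def parse_brief(output: str) -> list:
    """Parse show ip interface brief rows and flag each interface's health (assumed rule)."""
    rows = []
    for line in output.strip().splitlines()[1:]:  # skip the header row
        parts = line.split()
        if len(parts) < 6:
            continue  # ignore blank or malformed lines
        name, ip, _ok, _method = parts[:4]
        # Status may contain a space ("administratively down"), so take
        # Protocol from the end and join whatever sits in between.
        status = " ".join(parts[4:-1])
        protocol = parts[-1]
        rows.append({
            "interface": name,
            "ip": ip,
            "status": status,
            "protocol": protocol,
            "healthy": status == "up" and protocol == "up",  # assumed health rule
        })
    return rows


if __name__ == "__main__":
    for row in parse_brief(SAMPLE):
        print(row["interface"], "healthy" if row["healthy"] else "NOT healthy")
```

Each row's `healthy` flag could then drive a colour or icon in whatever front end renders the interfaces.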

https://www.automateyournetwork.ca/wp-content/uploads/verge3d/1170/IP_Interface_Brief.html

I have been very quiet on this blog lately, apologies! The best way to follow my development work now is probably Twitter or YouTube.

However! I have been using Blender to make 3D animations from network state data.

I’ve recently figured out how to make these animations web-ready!

Check it out! This is the PSIRT Report for the Cisco DevNet Sandbox Nexus 9k as a 3D Blender animation.

Click here for the full page version – Verge3D Web Interactive (automateyournetwork.ca)

Much more to come!