Recap of Part 1

In the first part of this series (Augmenting Network Engineering with RAFT – Automate Your Network), we explored Retrieval Augmented Fine-Tuning (RAFT) and its implementation using Cisco’s pyATS and LangChain pipelines. We saw how this methodology uses network routing information to enrich language models so they can give precise, contextually relevant answers. By extracting data from network routing tables and converting it into a fine-tuning dataset, we demonstrated how a standard model like ChatGPT 3.5 could be turned into a specialist in network routing.

The fine-tuning process was initially conducted using OpenAI’s cloud services, which, while effective, involved a cost. We discussed the financial aspects and the initial results, showing a promising path towards integrating deep network understanding into language models.

As a quick recap, we used RAG to create a specifically formatted JSONL dataset file and uploaded it to the OpenAI fine-tuning cloud service. The result was a new ChatGPT 3.5 variant fine-tuned on my specific data.

Introduction: Local Fine-Tuning with Llama3

Building on the foundation laid in part one, this next segment shifts focus from cloud-based solutions to a more personal and cost-effective approach: local fine-tuning. In this part, we will explore the journey of recreating the fine-tuning process in a completely private, local environment using Llama3, an alternative to OpenAI’s models.

The transition to local fine-tuning addresses several key considerations such as cost, data privacy, and the ability to leverage local hardware. This approach not only democratizes the use of advanced AI models by making them accessible without substantial ongoing costs but also ensures complete control over sensitive data, a critical aspect for many organizations.

We will delve into the technical setup required for local fine-tuning, including the use of CUDA and NVIDIA GPUs, and discuss the challenges and solutions encountered along the way. Additionally, this discussion will provide insights into the performance comparison between cloud-based and locally fine-tuned models, shedding light on the effectiveness and efficiency of local resources in the realm of advanced AI training.

Stay tuned as we unpack the complexities and triumphs of bringing high-level AI fine-tuning into the home lab, making cutting-edge technology more accessible and customizable for network engineers and tech enthusiasts alike.

LoRA (Low-Rank Adaptation) is a parameter-efficient approach to fine-tuning large language models (LLMs) like Llama3. LoRA freezes the existing model weights and trains only a small number of added parameters, enabling significant changes to the model’s behavior without retraining its billions of parameters. This technique is especially useful where computational resources are limited, or where one wishes to preserve the original strengths of the model while adapting it to new, specific tasks.

The concept behind LoRA is based on injecting trainable low-rank matrices into the pre-trained weights of a model. These matrices are much smaller in size compared to the original model’s parameters but can effectively steer the model’s behavior when fine-tuned on a target task.
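To make the size difference concrete, here is a small sketch of the arithmetic. It assumes a single 4096 x 4096 projection matrix and a rank of 16; the shape is an illustrative assumption rather than a figure from this article, and the function name lora_param_counts is hypothetical.

```python
def lora_param_counts(d_out, d_in, r):
    """Return (full, lora) parameter counts for one weight matrix."""
    full = d_out * d_in          # parameters in the frozen weight matrix W
    lora = r * (d_out + d_in)    # B is d_out x r, A is r x d_in
    return full, lora


# Assumed shape for illustration: one 4096 x 4096 projection, rank 16.
full, lora = lora_param_counts(4096, 4096, r=16)
print(f"full: {full:,}  lora: {lora:,}  ratio: {lora / full:.2%}")
```

Under these assumptions the adapter trains well under 1% of the parameters in that matrix, which is why LoRA fits on modest hardware.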

BitsAndBytesConfig and LoRA Configurations: In practice, this method involves configuring specific components such as BitsAndBytesConfig, which is used to manage aspects like quantization and computation precision. This helps in reducing the memory footprint during training. Meanwhile, the LoRA Config identifies the specific parts of the model architecture—like projection layers (up_proj, down_proj, etc.)—that will be adapted. These targeted adjustments ensure that the enhancements are focused and efficient, minimizing the disturbance to the model’s extensive pre-trained knowledge base.

The selection of target modules such as ['up_proj', 'down_proj', 'gate_proj', 'k_proj', 'q_proj', 'v_proj', 'o_proj'] is crucial and varies from one model to another, e.g., different targets would be chosen when adapting a Microsoft phi3 model.

ORPO (Odds Ratio Preference Optimization) is a preference-alignment technique used in fine-tuning. Rather than optimizing the prompts themselves, it trains the model on pairs of answers to the same prompt: a preferred (chosen) answer and a dispreferred (rejected) one. Its loss combines the usual supervised fine-tuning loss on the chosen answer with an odds-ratio penalty that pushes the model to favor the chosen answer over the rejected one, so no separate reference model or reward model is required.

The ORPO Trainer configures several key parameters:

Combining ORPO with LoRA gives an efficient fine-tuning process: ORPO supplies the preference-based training objective, while LoRA limits training to a small set of adapter parameters.

Just like fine-tuning in the cloud with OpenAI, we need a dataset, specifically a JSON Lines (.jsonl) file, with the structure required to fine-tune Llama3 with ORPO. As a quick reminder, the fine-tuning dataset structure for OpenAI ChatGPT 3.5 fine-tuning looked like this:

{"messages": [{"role": "system", "content": ""},
{"role": "user", "content": ""},
{"role": "assistant", "content": ""}]}

For Llama3 with ORPO we will use a system of rewards to fine-tune the model; that is to say, each entry has a prompt, a chosen answer, and a rejected answer that is only slightly incorrect. The generation of this dataset is where RAG comes in: we can use an open-book style approach and use factual, domain-specific dataset entries to “teach” the LLM about our information.

In my case I wanted to see if I could “teach” Llama3 specific details about the Cisco Validated Design for “FlexPod Datacenter with Generative AI Inferencing Design and Deployment Guide”; very specifically page 34’s VLAN table, and, even more specifically, the Out-of-Band (OOB) VLAN; VLAN 1020.

Here are the details about this VLAN right from the PDF:

Now, here is where the AI fun begins. First, we will use this information as our RAG source and generate 25-50 unique prompts, each with a chosen and a rejected answer. This will be the basis for our dataset. In my testing, and at the risk of overfitting the Llama3 model to my information, repeating these 25-50 questions about 10-20 times (500-800 sets in the dataset JSONL file) seemed to be enough to “teach” the Llama3 model. Here are a few examples:

{“prompt”: “Q: Read the following context and answer the question. Context: VLANs configured for setting up the FlexPod environment along with their usage. VLAN ID – 1020, Name – OOB-MGMT-VLAN, Usage – Out-of-band management VLAN to connect management ports for various devices. IP Subnet – 10.102.0.0/24; GW: 10.102.0.254. Question: What is the VLAN ID for the out-of-band management VLAN in the FlexPod environment? Answer:\nThe answer is:”,

“chosen”: “user\nQ: Read the following context and answer the question. Context: VLANs configured for setting up the FlexPod environment along with their usage. VLAN ID – 1020, Name – OOB-MGMT-VLAN, Usage – Out-of-band management VLAN to connect management ports for various devices. IP Subnet – 10.102.0.0/24; GW: 10.102.0.254. Question: What is the VLAN ID for the out-of-band management VLAN in the FlexPod environment? Answer:\nThe answer is:\nassistant\n1020\n”,

“rejected”: “user\nQ: Read the following context and answer the question. Context: VLANs configured for setting up the FlexPod environment along with their usage. VLAN ID – 1020, Name – OOB-MGMT-VLAN, Usage – Out-of-band management VLAN to connect management ports for various devices. IP Subnet – 10.102.0.0/24; GW: 10.102.0.254. Question: What is the VLAN ID for the out-of-band management VLAN in the FlexPod environment? Answer:\nThe answer is:\n1024\n”}

{“prompt”: “Q: Read the following context and answer the question. Context: VLANs configured for setting up the FlexPod environment along with their usage. VLAN ID – 1020, Name – OOB-MGMT-VLAN, Usage – Out-of-band management VLAN to connect management ports for various devices. IP Subnet – 10.102.0.0/24; GW: 10.102.0.254. Question: What is the name of the VLAN ID 1020 in the FlexPod environment? Answer:\nThe answer is:”,

“chosen”: “user\nQ: Read the following context and answer the question. Context: VLANs configured for setting up the FlexPod environment along with their usage. VLAN ID – 1020, Name – OOB-MGMT-VLAN, Usage – Out-of-band management VLAN to connect management ports for various devices. IP Subnet – 10.102.0.0/24; GW: 10.102.0.254. Question: What is the name of the VLAN ID 1020 in the FlexPod environment? Answer:\nThe answer is:\nassistant\nOOB-MGMT-VLAN\n”,

“rejected”: “user\nQ: Read the following context and answer the question. Context: VLANs configured for setting up the FlexPod environment along with their usage. VLAN ID – 1020, Name – OOB-MGMT-VLAN, Usage – Out-of-band management VLAN to connect management ports for various devices. IP Subnet – 10.102.0.0/24; GW: 10.102.0.254. Question: What is the name of the VLAN ID 1020 in the FlexPod environment? Answer:\nThe answer is:\nManagement-VLAN\n”}
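As a rough sketch of how records in this shape could be produced, the snippet below builds one prompt/chosen/rejected entry and serializes it as JSON Lines. The helpers make_record and to_jsonl are hypothetical names, the context string is shortened, and the layout simply mirrors the examples above (including the rejected field having no assistant marker); it is not the generation code used for the article.

```python
import json


def make_record(context, question, chosen, rejected):
    """Build one preference record mirroring the layout of the examples above."""
    prompt = (
        "Q: Read the following context and answer the question. "
        f"Context: {context} Question: {question} Answer:\nThe answer is:"
    )
    return {
        "prompt": prompt,
        "chosen": f"user\n{prompt}\nassistant\n{chosen}\n",
        "rejected": f"user\n{prompt}\n{rejected}\n",
    }


def to_jsonl(records, repeats=1):
    """Serialize records as JSON Lines, repeating the whole set `repeats` times."""
    lines = [json.dumps(r, ensure_ascii=False) for r in records]
    return "\n".join(lines * repeats) + "\n"


# Shortened context, for illustration only.
context = "VLAN ID - 1020, Name - OOB-MGMT-VLAN, Usage - Out-of-band management VLAN."
record = make_record(
    context,
    "What is the VLAN ID for the out-of-band management VLAN in the FlexPod environment?",
    chosen="1020",
    rejected="1024",
)
jsonl_text = to_jsonl([record], repeats=10)
print(len(jsonl_text.splitlines()), "lines")
```

Writing jsonl_text to training_dataset.jsonl would give a file that a JSON Lines loader can read one record per line.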

Now that we have used RAG to create our dataset, let’s move on to the local, private, free fine-tuning. Let’s take a look at my fine-tuning script:

import gc

import os

import torch

These lines import essential libraries. gc handles garbage collection to manage memory during intensive computations, os provides operating-system-dependent functionality such as reading or writing files, and torch is the backbone for most operations involving neural networks.

from datasets import load_dataset

from peft import LoraConfig, get_peft_model, prepare_model_for_kbit_training, PeftModel

from transformers import AutoModelForCausalLM, AutoTokenizer, BitsAndBytesConfig

from trl import ORPOConfig, ORPOTrainer, setup_chat_format

from huggingface_hub import HfApi, create_repo, upload_file

This block imports necessary classes and functions from various libraries:

datasets for loading and managing datasets.
peft for preparing and configuring the model with LoRA adaptations.
transformers for accessing pre-trained models and utilities for tokenization.
trl for configuring and running the ORPO fine-tuning process.
huggingface_hub for model management and deployment to Hugging Face’s model hub.

if torch.cuda.is_available() and torch.cuda.get_device_capability()[0] >= 8:
    attn_implementation = "flash_attention_2"
    torch_dtype = torch.bfloat16
else:
    attn_implementation = "eager"
    torch_dtype = torch.float16

Here, the script checks whether a CUDA-capable GPU is available and whether its compute capability is 8.0 or higher (NVIDIA Ampere or newer). If so, it selects FlashAttention 2 and bfloat16; otherwise it falls back to the eager attention implementation and float16. This ensures the model uses the most efficient computation methods the hardware supports, speeding up training and reducing memory usage.

base_model = "meta-llama/Meta-Llama-3-8B"

new_model = "aicvd"

These lines specify the base model to be fine-tuned (meta-llama/Meta-Llama-3-8B) and the name for the new, fine-tuned model (aicvd). This setup is crucial for initializing the fine-tuning process and managing the output.

In this section of the script, we configure the settings for BitsAndBytes and Low-Rank Adaptation (LoRA), which are critical for optimizing the fine-tuning process. Let’s explore what each configuration accomplishes and how it contributes to fine-tuning your LLM.

bnb_config = BitsAndBytesConfig(

load_in_4bit=True,

bnb_4bit_quant_type="nf4",

bnb_4bit_compute_dtype=torch_dtype,

bnb_4bit_use_double_quant=True,

)

BitsAndBytesConfig plays a pivotal role in managing how the model’s data is quantized and computed during training:

load_in_4bit: This setting enables 4-bit quantization, drastically reducing the memory requirements of the model by quantizing weights to 4 bits instead of the typical 16 or 32 bits. This allows for storing and processing model parameters more efficiently, which is especially beneficial when working with large models on hardware with limited memory.
bnb_4bit_quant_type: The quantization type "nf4" selects the 4-bit NormalFloat data type, introduced with QLoRA and designed for weights that are approximately normally distributed, which helps maintain accuracy despite the reduced bit depth.
bnb_4bit_compute_dtype: This parameter ties the computation data type to the torch_dtype chosen earlier, so that operations are performed in the most suitable precision available on the hardware (either bfloat16 or float16).
bnb_4bit_use_double_quant: This enables double quantization, which also quantizes the quantization constants themselves to save additional memory.

peft_config = LoraConfig(

r=16,

lora_alpha=32,

lora_dropout=0.05,

bias="none",

task_type="CAUSAL_LM",

target_modules=['up_proj', 'down_proj', 'gate_proj', 'k_proj', 'q_proj', 'v_proj', 'o_proj']

)

LoraConfig sets the parameters for Low-Rank Adaptation, which allows for efficient and targeted model tuning:

r: The rank of the low-rank matrices added to the model. A lower rank means fewer parameters to tune, which enhances efficiency. Here, r=16 indicates a modest number of parameters being adapted, balancing flexibility and efficiency.
lora_alpha: This parameter adjusts the scaling of the low-rank matrices. The low-rank update is scaled by lora_alpha / r, so a higher lora_alpha makes the adapter’s contribution more pronounced.
lora_dropout: Applies dropout to the LoRA layers, a technique for preventing overfitting by randomly setting a fraction of the features to zero during training.
bias: Controls which bias terms are trained. Setting this to "none" leaves all bias terms frozen, which helps maintain the original characteristics of the model while still allowing for adaptability.
task_type: Specifies the type of task the model is being fine-tuned for, in this case "CAUSAL_LM" (causal language modeling), appropriate for generating text based on the input provided.
target_modules: Defines which parts of the model the LoRA adaptations are applied to. The selection of modules like 'up_proj', 'down_proj', and others focuses the fine-tuning on specific, critical components of the model’s architecture, impacting how it processes and generates text.

These configurations are essential for refining the model’s capabilities and optimizing its performance, especially when dealing with specialized tasks and datasets in a resource-constrained environment.
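A back-of-the-envelope estimate shows why 4-bit loading matters on a single GPU. The sketch below assumes roughly 8 billion parameters and counts only the weights; it ignores quantization constants, activations, gradients, and optimizer state, so real usage will be higher. The helper name weight_memory_gib is hypothetical.

```python
def weight_memory_gib(n_params, bits_per_param):
    """Approximate memory for the weights alone, in GiB."""
    return n_params * bits_per_param / 8 / 2**30


n_params = 8e9  # assumption: roughly 8 billion parameters
for label, bits in [("float32", 32), ("float16/bfloat16", 16), ("4-bit", 4)]:
    print(f"{label:>17}: {weight_memory_gib(n_params, bits):.1f} GiB")
```

Under these assumptions the 16-bit weights alone take most of a 24 GB card, while the 4-bit weights leave room for the adapter, activations, and optimizer state.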

In this section of our script, we prepare the tokenizer and the model, load the dataset, and perform some initial setup for training. Let’s break down these steps, starting with tokenization.

tokenizer = AutoTokenizer.from_pretrained(base_model)

Tokenization is the process of converting text into a format that a machine learning model can understand, typically by breaking down the text into smaller pieces called tokens. These tokens can be words, subwords, or even characters depending on the tokenizer configuration.

The tokenizer here is loaded from a pretrained model’s associated tokenizer (base_model), which ensures that the tokenization scheme matches the one used during the initial training of the model. This consistency is crucial for the model to correctly interpret the input data.

model = AutoModelForCausalLM.from_pretrained(

base_model,

quantization_config=bnb_config,

device_map="auto",

attn_implementation=attn_implementation,

torch_dtype=torch_dtype,

low_cpu_mem_usage=True,

)

This loads base_model as a causal language model, which generates text one token at a time based on the previous tokens.

model, tokenizer = setup_chat_format(model, tokenizer)
model = prepare_model_for_kbit_training(model)

file_path = "training_dataset.jsonl"
print("dataset load")
dataset = load_dataset('json', data_files={'train': file_path}, split='all')
print("dataset shuffle")
dataset = dataset.shuffle(seed=42)

This part of the script is critical as it ensures the model is correctly set up with the optimal configurations and the data is prepared in a manner that promotes effective learning.

In this part of the script, we refine the dataset further by applying a custom formatting function and then splitting the dataset into training and testing subsets. Let’s break down these steps:

def format_chat_template(row):
    role = "You are an expert on the Cisco Validated Design FlexPod Datacenter with Generative AI Inferencing Design and Deployment Guide."
    row["chosen"] = f'{role} {row["chosen"]}'
    row["rejected"] = f'{role} {row["rejected"]}'
    row["role"] = role
    return row

This function, format_chat_template, prepares the dataset for ORPO (Odds Ratio Preference Optimization). It formats each data point by prepending the expert role statement to both the chosen and the rejected responses and by storing that statement in a new role field.

print("dataset map")

dataset = dataset.map(

format_chat_template,

num_proc=os.cpu_count() // 2,

batched=False

)

dataset.map applies the format_chat_template function to each item in the dataset.
batched is set to False so that the function is applied to each row individually rather than to batches of rows; the function expects a single row, not a batch.

print("dataset train_test_split")
dataset = dataset.train_test_split(test_size=0.01)

test_size=0.01 indicates that 1% of the dataset is reserved for testing, and the remaining 99% is used for training. This split allows you to train the model on the majority of the data while holding back a small portion to evaluate the model’s performance on unseen data.

This structured approach to formatting and splitting the data ensures that the training process is both contextually relevant and robust, providing a solid foundation for the subsequent fine-tuning steps. The specific formatting and careful split also help in assessing the model’s ability to generalize beyond the training data while maintaining a focus on the expert knowledge it is supposed to emulate.
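To see what the formatting step does to a single row, and why row-wise mapping matters, here is a small stand-alone sketch. It reuses the logic of format_chat_template on plain dictionaries rather than on a datasets object; the sample row contents are shortened placeholders, not real dataset entries.

```python
ROLE = (
    "You are an expert on the Cisco Validated Design FlexPod Datacenter "
    "with Generative AI Inferencing Design and Deployment Guide."
)


def format_chat_template(row):
    """Prepend the role statement to both answers and record it on the row."""
    row["chosen"] = f'{ROLE} {row["chosen"]}'
    row["rejected"] = f'{ROLE} {row["rejected"]}'
    row["role"] = ROLE
    return row


# Placeholder row for illustration only.
sample = {"prompt": "Q: ...", "chosen": "assistant\n1020\n", "rejected": "1024\n"}
formatted = format_chat_template(dict(sample))
print(formatted["chosen"][:40])

# If the function received a batch (columns as lists), the f-string would
# stringify the whole list into one value instead of formatting each row.
batch = {"chosen": ["a", "b"], "rejected": ["c", "d"]}
broken = format_chat_template(dict(batch))
print(type(broken["chosen"]).__name__)
```

The second call illustrates the failure mode: the two chosen answers collapse into a single string, which is why the function is mapped row by row.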

In this part of our script, we will set up and execute the training process using the ORPO (Odds Ratio Preference Optimization) Trainer, which fine-tunes the model on chosen/rejected preference pairs. Let’s delve into the configuration and the training process, emphasizing num_train_epochs and learning_rate, as these are crucial parameters that significantly influence the training dynamics and outcomes.

orpo_args = ORPOConfig(

learning_rate=1e-4,

beta=0.1,

lr_scheduler_type="linear",

max_length=1024,

max_prompt_length=512,

per_device_train_batch_size=2,

per_device_eval_batch_size=2,

gradient_accumulation_steps=4,

optim="paged_adamw_8bit",

num_train_epochs=3,

evaluation_strategy="steps",

eval_steps=100,

logging_steps=100,

warmup_steps=10,

report_to="wandb",

output_dir="./results/",

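)

Learning Rate (1e-4):

A learning rate of 1e-4 is relatively low, which means the model updates its weights incrementally. This cautious approach helps prevent the model from converging too quickly to a local minimum and allows it to find a better overall solution by exploring the loss landscape more thoroughly.

Number of Training Epochs (3):

Setting num_train_epochs to 3 means each example in the training set will influence the model’s learning three times. For fine-tuning scenarios, where the model is already pre-trained and only needs to adapt to specific tasks, a small number of epochs is often sufficient.

Beta (0.1):

beta weights the odds-ratio term of the ORPO loss relative to the supervised fine-tuning loss.

Linear Learning Rate Scheduler:

After the warm-up steps, the learning rate decays linearly toward zero over the remaining training steps.

Gradient Accumulation Steps (4):

Gradients from 4 batches are accumulated before each optimizer step, so with a per-device batch size of 2 the effective batch size per device is 8.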

trainer = ORPOTrainer(

model=model,

args=orpo_args,

train_dataset=dataset["train"],

eval_dataset=dataset["test"],

peft_config=peft_config,

tokenizer=tokenizer,

)

print("training model")

trainer.train()

print("saving model")

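trainer.save_model(new_model)

The ORPOTrainer is initialized with the model, the training arguments, the train and test datasets, the tokenizer, and the LoRA configuration (peft_config).
trainer.train() runs the fine-tuning.
trainer.save_model saves the result under new_model. This allows the fine-tuned model to be reused or deployed.

This comprehensive setup and the detailed explanation of key parameters help in understanding how each component influences the fine-tuning process, enabling the model to efficiently learn domain-specific knowledge from the provided dataset.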
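To get a feel for how the batch size, gradient accumulation, epochs, warm-up, and linear scheduler interact, the following sketch works through the arithmetic. It assumes a training split of 792 rows (99% of an 800-row dataset) on a single GPU; the helper names are hypothetical, and the trainer’s exact rounding of step counts may differ slightly.

```python
import math


def steps_per_epoch(n_train, per_device_batch, grad_accum, n_devices=1):
    """Return (optimizer steps per epoch, effective batch size)."""
    effective_batch = per_device_batch * grad_accum * n_devices
    return math.ceil(n_train / effective_batch), effective_batch


def linear_lr(step, total_steps, base_lr=1e-4, warmup_steps=10):
    """Linear warm-up to base_lr, then linear decay to zero."""
    if step < warmup_steps:
        return base_lr * step / warmup_steps
    remaining = max(0, total_steps - step)
    return base_lr * remaining / max(1, total_steps - warmup_steps)


n_train = 792  # assumption: 99% of an 800-row dataset
per_epoch, effective_batch = steps_per_epoch(n_train, per_device_batch=2, grad_accum=4)
total_steps = per_epoch * 3  # num_train_epochs=3

print("effective batch size:", effective_batch)
print("optimizer steps:", total_steps)
for step in (0, 5, 10, total_steps // 2, total_steps):
    print(step, f"{linear_lr(step, total_steps):.2e}")
```

With a run this short, eval_steps=100 and logging_steps=100 would produce only a handful of evaluation and logging points, which is worth keeping in mind when reading the training curves.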

In this final section of the script, we are taking steps to manage the system’s resources efficiently, reload components for further use, and integrate the fine-tuned adapters with the base model. Let’s break down what each step accomplishes:

del trainer, model

gc.collect()

torch.cuda.empty_cache()

After the training completes, it’s important to release the resources used during the process. This block of code:

Deletes the trainer and model objects, removing them from memory.
Calls gc.collect() to trigger Python’s garbage collector, which helps in reclaiming memory by clearing out unreferenced objects.
Calls torch.cuda.empty_cache() to clear the GPU memory cache that PyTorch uses to speed up operations. Clearing this cache releases memory so the GPU can handle other tasks or subsequent operations more efficiently.

tokenizer = AutoTokenizer.from_pretrained(base_model)

model = AutoModelForCausalLM.from_pretrained(

base_model,

low_cpu_mem_usage=True,

return_dict=True,

torch_dtype=torch.float16,

device_map="auto",

)

model, tokenizer = setup_chat_format(model, tokenizer)

The tokenizer and base model are reloaded, this time without quantization. low_cpu_mem_usage reduces memory usage while loading, return_dict ensures the model outputs are returned as dictionary-like objects for easier handling, and torch_dtype and device_map configure the model for the available hardware.

model = PeftModel.from_pretrained(model, new_model)
print("merge and unload model")
model = model.merge_and_unload().to("cuda")

PeftModel.from_pretrained loads the fine-tuned LoRA adapter weights stored in new_model on top of the base model. This is a crucial step as it combines the general capabilities of the base model with the specialized knowledge encoded in the adapters. merge_and_unload() folds the adapter weights into the base model’s weights and returns a plain model without the adapter wrappers, and .to("cuda") moves the merged model to the GPU for efficient computation.

This entire sequence not only ensures that the model is prepared for deployment or further evaluation but also highlights best practices in managing computational resources during and after intensive machine learning tasks. By clearing resources, reinitializing components, and merging the adapters, you maintain system efficiency and ensure that the fine-tuned model reflects the intended enhancements.
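Conceptually, merging a LoRA adapter adds the scaled low-rank product to the frozen weight matrix. The toy sketch below illustrates that idea with plain Python lists; it is not the PEFT implementation, and the matrices, values, and the helper names matmul and merge_lora are made up for illustration.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def merge_lora(w, b_mat, a_mat, lora_alpha, r):
    """Return W + (lora_alpha / r) * (B @ A)."""
    scale = lora_alpha / r
    delta = matmul(b_mat, a_mat)
    return [
        [w_ij + scale * d_ij for w_ij, d_ij in zip(w_row, d_row)]
        for w_row, d_row in zip(w, delta)
    ]


w = [[1.0, 0.0], [0.0, 1.0]]   # frozen base weight (2 x 2)
b_mat = [[0.5], [0.0]]          # LoRA B (2 x 1), rank 1
a_mat = [[0.0, 1.0]]            # LoRA A (1 x 2)
merged = merge_lora(w, b_mat, a_mat, lora_alpha=2, r=1)
print(merged)
```

After merging, the result has the same shape as the original weight, so inference needs no extra adapter computation.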

Here is the hardware I am using:

I have access to an NVIDIA L4 environment with 24GB of VRAM. Here is the output of the orpo_tune.py code raw from the CLI:

The logs captured from the console provide a detailed record of the training progress, metrics, and configurations used during the fine-tuning session. Here’s a breakdown based on the images, highlighting key aspects and their implications for the fine-tuning process.

The WandB (Weights & Biases) logs captured during the fine-tuning session are crucial for understanding how the model is performing and iterating over its training cycles. Here’s a breakdown of the key components of the WandB logs and what they mean for your project:

WandB Dashboard Overview: WandB creates a comprehensive dashboard that tracks and visualizes numerous metrics during the model training process. This dashboard is essential for monitoring the model’s performance in real-time, understanding trends, and diagnosing issues.

Key Metrics Explained:

Loss (train/loss, eval/loss)

Accuracy (train/accuracies, eval/accuracies)

Reward margins (train/rewards/margins, eval/rewards/margins)

Log odds ratio (train/log_odds_ratio, eval/log_odds_ratio)

Gradient norm (train/grad_norm)

Learning rate (train/learning_rate)

Throughput (train/samples_per_second, train/steps_per_second)

The data captured in WandB not only aids in monitoring and refining the training process but also serves as a valuable record for future model iterations and audits. By analyzing trends in these metrics, you can make informed decisions about adjustments to the training process, such as tuning hyperparameters or modifying the model architecture. Additionally, sharing these insights through platforms like WandB allows for collaborative review and enhancement, fostering a community approach to model development.
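The log_odds_ratio metric comes from ORPO’s odds-ratio term. As a rough sketch of the published ORPO formulation (Odds Ratio Preference Optimization), and not the TRL implementation itself, the snippet below computes the log odds ratio between a chosen and a rejected answer and the resulting penalty. The probabilities 0.6 and 0.2 are made-up illustrative values, and the helper names are hypothetical.

```python
import math


def log_odds(logp):
    """log(p / (1 - p)), computed from a log-probability."""
    return logp - math.log1p(-math.exp(logp))


def orpo_terms(chosen_logp, rejected_logp, beta=0.1):
    """Return (log odds ratio, beta-weighted odds-ratio penalty)."""
    log_odds_ratio = log_odds(chosen_logp) - log_odds(rejected_logp)
    # -log(sigmoid(x)) == log(1 + exp(-x))
    penalty = math.log1p(math.exp(-log_odds_ratio))
    return log_odds_ratio, beta * penalty


# Illustrative values: the model gives the chosen answer p=0.6, the rejected p=0.2.
good_ratio, good_penalty = orpo_terms(math.log(0.6), math.log(0.2))
bad_ratio, bad_penalty = orpo_terms(math.log(0.2), math.log(0.6))
print(f"chosen favored:   ratio={good_ratio:.3f} penalty={good_penalty:.4f}")
print(f"rejected favored: ratio={bad_ratio:.3f} penalty={bad_penalty:.4f}")
```

A rising log odds ratio during training therefore indicates that the model is increasingly favoring chosen answers over rejected ones, and the penalty shrinks as it does so.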

remove_unused_columns=False is set to handle data more efficiently.
Setting save_embedding_layers to True suggests that embeddings are being saved post-training, which is vital for deploying the model with all its learned nuances.

These logs not only provide a comprehensive look into the fine-tuning process but also serve as a crucial tool for debugging and optimizing the training session. The total runtime, detailed per epoch, helps in planning the computational resources and time required for such tasks, especially when dealing with large datasets and complex models.

By analyzing these logs, readers can gain insights into practical aspects of model training, from setup through execution to completion, ensuring they are well-equipped to manage their own fine-tuning projects effectively. This detailed record-keeping is essential for transparency, reproducibility, and iterative improvement of machine learning models.

The script is not finished; there are some final steps that upload the model to HuggingFace (more on this later), much like you would push your code to GitHub, except that here we push our model.

However, it is a good idea to test our model before we upload it and publish it publicly to HuggingFace. So, let’s take a quick break and look at my inference testing code first.

As a control test, we will also set up Ollama locally to host the base, unaltered Llama3. We then run the same testing prompts against both the base Llama3 model and our model fine-tuned with domain-specific OOB VLAN data, to “prove” we have had an impact on the model and “taught” it our domain-specific data.

In the journey of fine-tuning an AI model, one of the most critical steps is validation. This involves testing the fine-tuned model to ensure it performs as expected, particularly in domain-specific scenarios it was trained on. To achieve this, I’ve designed a Python script, query_model.py, which not only tests the fine-tuned model but also compares its performance with the original, unaltered Llama3 model.

Importing Necessary Libraries:

import torch

from transformers import AutoTokenizer, AutoModelForCausalLM

from peft import PeftModel, PeftModelForCausalLM

from torch.cuda.amp import autocast

from langchain_community.llms import Ollama

These imports bring in the necessary tools from PyTorch, Transformers, PEFT, and LangChain, essential for model handling, tokenization, and utilizing hardware acceleration features.

Initialization and Model Loading:

model_dir = "./aicvd"

base_model_name = "meta-llama/Meta-Llama-3-8B"

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

llm = Ollama(model="llama3")

tokenizer = AutoTokenizer.from_pretrained(model_dir)

Here, we define the directory of our fine-tuned model and the name of the base model. The script checks for GPU availability to optimize computation. The Ollama object initializes the base Llama3 model, and the tokenizer is loaded from the fine-tuned model’s directory to ensure consistency in text processing.

Handling Model Compatibility:

try:
    base_model_instance = AutoModelForCausalLM.from_pretrained(base_model_name, torch_dtype=torch.float16).to(device)
    if len(tokenizer) != base_model_instance.config.vocab_size:
        base_model_instance.resize_token_embeddings(len(tokenizer))
    fine_tuned_model = PeftModelForCausalLM.from_pretrained(base_model_instance, model_dir)
    fine_tuned_model = fine_tuned_model.to(device)
except Exception as e:
    print(f"Failed to load fine-tuned model: {e}")
    return

This section attempts to load both the base and fine-tuned models, adjusting the token embeddings to match the tokenizer if necessary. This ensures that the models will understand and generate text correctly using the fine-tuned tokenizer. The bare return implies that this block lives inside a function in query_model.py, presumably a main-style wrapper.

Preparing the Test Questions:

questions = [

"What is the VLAN ID for the out-of-band management VLAN in the FlexPod environment?",

"What is the subnet for the out-of-band management VLAN in the FlexPod environment?",

"What is the gateway for the out-of-band management VLAN in the FlexPod environment?",

"What is the name for the out-of-band management VLAN in the FlexPod environment?",

"What is the name of the VLAN ID 1020 in the FlexPod environment?",

"What is the gateway address for the OOB-MGMT-VLAN",

"What is the IP subnet used for the OOB-MGMT-VLAN in the FlexPod environment??",

"What can you tell me about out of band management on the FlexPod?",

"I am setting up out of band management on the FlexPod what is the VLAN ID?",

"I am setting up out of band management on the FlexPod what is the subnet?",

"I am setting up out of band management on the FlexPod what is the gateway?"

]

A list of specific, domain-focused questions is prepared to evaluate how well the fine-tuned model has learned the specialized content compared to the base model.

Setting Up Output Files and Testing Functions:

output_file = "model_output.txt"
with open(output_file, "w") as file:
    file.write("")

This initializes an output file to log the responses from both models, ensuring that we capture and can review all outputs systematically.

Executing the Tests:

test_model("Fine-Tuned Model", fine_tuned_model, tokenizer, questions, device, output_file)

test_model_with_llm("Base Model", llm, questions, output_file)

The testing functions are invoked for both the fine-tuned and the base models. Each function generates answers to the set questions, logs them to the output file, and also prints them, providing immediate feedback on the models’ performances.

By testing the models in this structured manner, we can directly observe and quantify the improvements the fine-tuning process has brought about. The comparison against the base model serves as a control group, emphasizing the enhancements in domain-specific knowledge handling by the fine-tuned model.

This rigorous testing protocol ensures that our fine-tuned model not only performs well in theory but also excels in practical, real-world tasks it’s been specialized for. This approach is essential for deploying AI models confidently in specialized domains like network management and IT infrastructure. The script we designed for testing the model is a comprehensive setup that includes comparing the performance of your fine-tuned model against a baseline model (Llama3) using the Ollama framework. Here’s a breakdown of the key components and their functions in this script:

test_model: This function iterates over the list of questions, generates answers using the fine-tuned model, and logs both the question and the answer to an output file and the console. It leverages PyTorch’s autocast for mixed-precision computation, optimizing performance during inference.
test_model_with_llm: Similar to test_model, but it uses the Llama3 model via the Ollama framework to generate responses. This function allows for a direct comparison between the base and fine-tuned models under identical query conditions.
ask_model: This function handles the actual querying of the model. It preprocesses the input question, executes the model inference, and processes the output to a human-readable form. The settings (max_length and num_beams) control the length of the generated response and the beam search process, which affects the quality and diversity of the generated answers.
torch.no_grad() and autocast(): Using these helps in reducing memory usage and speeding up the inference, which is critical for testing large models efficiently.

This script sets up a thorough testing environment that not only ensures your model functions as expected but also rigorously evaluates its performance enhancements. After testing, you’ll have concrete evidence of how the fine-tuning has impacted the model’s ability to handle queries related to your specific domain, justifying the efforts and resources invested in the fine-tuning process.
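The bodies of these helper functions are not shown in this article, so the following is only a sketch of the general pattern they describe: loop over the questions, get an answer from some callable, and log both to a file and the console. The name run_questions and the fake_ask stand-in are hypothetical; in a real run, the callable would wrap the fine-tuned model’s generation step or the Ollama-hosted base model.

```python
import os
import tempfile


def run_questions(label, ask, questions, output_file):
    """Ask each question via `ask`, then log the question and answer."""
    results = []
    with open(output_file, "a", encoding="utf-8") as fh:
        fh.write(f"Testing {label}:\n")
        for question in questions:
            answer = ask(question)
            fh.write(f"{question}\n{answer}\n\n")
            print(f"[{label}] {question}\n{answer}\n")
            results.append((question, answer))
    return results


def fake_ask(question):
    """Stand-in for a real model call, used only for this demonstration."""
    return "1020" if "VLAN ID" in question else "unknown"


demo_questions = [
    "What is the VLAN ID for the out-of-band management VLAN in the FlexPod environment?",
    "What is the gateway address for the OOB-MGMT-VLAN",
]
demo_path = os.path.join(tempfile.mkdtemp(), "model_output.txt")
results = run_questions("Demo Model", fake_ask, demo_questions, demo_path)
```

Passing the same question list and output file to one call per model keeps the comparison between the base and fine-tuned models like for like.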

Here are some of the results (shortened for brevity, but enough to prove the point) from the testing performed with the query_model.py script:

Testing Base Model:

What is the VLAN ID for the out-of-band management VLAN in the FlexPod environment?

In a FlexPod (F5-Fortinet-EMC-Pod) environment, the VLAN ID for the out-of-band (OOB) management VLAN is typically VLAN 3968.

(Note: this is incorrect. It is VLAN 1020.)

Testing Fine-Tuned Model:

What is the VLAN ID for the out-of-band management VLAN in the FlexPod environment?

VLAN ID – 1020, Name – OOB-MGMT-VLAN, Usage – Out-of-band management VLAN to connect management ports for various devices. IP Subnet – 10.102.0.0/24; GW: 10.102.0.254.

(Note: this is correct.)
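A spot check like this can be turned into something repeatable. The sketch below is not part of query_model.py; it simply checks whether the expected VLAN ID appears as a standalone number in each answer. The helper name mentions_number is hypothetical, and the base answer is shortened from the output above. A real evaluation would need more than a substring match, so treat this as a starting point only.

```python
import re

EXPECTED_VLAN_ID = 1020  # the correct value noted above

# Answers copied from the test run above (base answer shortened).
BASE_ANSWER = (
    "the VLAN ID for the out-of-band (OOB) management VLAN "
    "is typically VLAN 3968."
)
TUNED_ANSWER = (
    "VLAN ID – 1020, Name – OOB-MGMT-VLAN, Usage – Out-of-band management "
    "VLAN to connect management ports for various devices. "
    "IP Subnet – 10.102.0.0/24; GW: 10.102.0.254."
)


def mentions_number(answer: str, number: int) -> bool:
    """True if `number` appears in the answer as a standalone token."""
    return re.search(rf"\b{number}\b", answer) is not None


for label, answer in (("base", BASE_ANSWER), ("fine-tuned", TUNED_ANSWER)):
    verdict = "correct" if mentions_number(answer, EXPECTED_VLAN_ID) else "incorrect"
    print(f"{label}: {verdict}")
```

The word-boundary match matters here: the fine-tuned answer also contains 10.102.0.0/24, which should not be mistaken for the VLAN ID.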

Back to our orpo_tune.py script – the very end includes some code to push the model files to HuggingFace.

First, we need a HuggingFace account as well as a write API key.

Before we run our orpo_tune.py script, we will log in with our token using the huggingface-cli login command.

Now that we’ve completed the fine-tuning and testing of the model, the final step in making your work accessible and reusable is to upload the trained model to Hugging Face Hub. Let’s break down this process and how it’s handled in your orpo_tune.py script.

Hugging Face Hub serves as a central repository where machine learning models can be hosted, shared, and even collaborated on, similar to how code is managed on GitHub. This platform supports a wide range of machine learning models and provides tools that make it easy to deploy and utilize these models.

Before you can upload the model, you need to specify where the model and its configurations are located:

# Define the path to your files

adapter_config_path = "aicvd/adapter_config.json"

adapter_model_path = "aicvd/adapter_model.safetensors"

These paths point to the necessary configuration and model files saved during the fine-tuning process. These files include the adapter configurations which detail how the model has been adjusted and the actual model parameters saved in a format optimized for safe and efficient loading.
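Since everything that follows depends on these files being where the script expects them, a quick pre-flight check can save a failed upload. This is a small sketch of my own, not something in orpo_tune.py, and the helper names file_sizes and unusable are hypothetical. It reports the size of each file and flags any that are missing or empty.

```python
from pathlib import Path


def file_sizes(paths):
    """Map each path to its size in bytes, or None if the file is missing."""
    sizes = {}
    for path in paths:
        p = Path(path)
        sizes[str(path)] = p.stat().st_size if p.is_file() else None
    return sizes


def unusable(sizes):
    """Return the paths that are missing (None) or empty (0 bytes)."""
    return [path for path, size in sizes.items() if not size]


# Example with the paths defined in the script:
# problems = unusable(file_sizes([adapter_config_path, adapter_model_path]))
# if problems:
#     raise SystemExit(f"Fix these files before uploading: {problems}")
```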

You also need to define the repository on Hugging Face Hub where the model will be uploaded:

repo_name = "automateyournetwork/aicvd"

This repository path follows the format username/repository, which in this case indicates that the repository belongs to the user automateyournetwork and is specifically for the aicvd model. If this repository does not already exist, Hugging Face will automatically create it when you push your files.

Finally, the script uses the following commands to upload the model and tokenizer:

model.push_to_hub(repo_name, use_temp_dir=False)

tokenizer.push_to_hub(repo_name, use_temp_dir=False)

The push_to_hub method is part of the Hugging Face Transformers library. This method handles the uploading of your fine-tuned model and its tokenizer to the specified repository. The use_temp_dir=False argument directs the method to push directly from the current directories without creating a temporary directory, which can be useful for keeping the upload straightforward and managing storage usage efficiently.

By uploading your fine-tuned model to Hugging Face Hub, you not only contribute to the broader AI community but also ensure that your work is preserved, accessible, and ready to be built upon. This step is crucial for promoting open science and collaboration in AI, allowing others to benefit from your effort and potentially improve upon it.

All of this worked for me. However, when I tested inference a second time (we will get to that) with the same query_model.py script, this time against the model I had uploaded and then downloaded again, it failed because it could not find adapter_config.json. After a bit of trying to figure this out, I settled on direct API calls to HuggingFace to upload these files. This is a common challenge when deploying models that require specific configurations or additional files not typically handled by standard push methods.

To resolve the issue of missing configuration files during the model deployment phase, a more direct approach was adopted to ensure all necessary components of the model were properly uploaded and accessible on Hugging Face Hub.

To ensure that all relevant files are present and correctly linked in the repository, we utilized the HfApi client, provided by Hugging Face. This approach allows for finer control over file uploads, ensuring that each component of the model’s ecosystem is correctly positioned within the repository.

from huggingface_hub import HfApi, create_repo

# Initialize the HfApi client

api = HfApi()

# Ensure the repository exists

create_repo(repo_name, exist_ok=True)

Here, HfApi is initialized to interact directly with the Hugging Face Hub API. The create_repo function checks if the specified repository exists or creates it if it does not, with exist_ok=True ensuring that no error is raised if the repository already exists.

Once the repository setup is verified, the next step is to upload the essential files manually to ensure they are properly included in the repository.

# Upload files individually

api.upload_file(

path_or_fileobj=adapter_config_path,

path_in_repo="adapter_config.json",

repo_id=repo_name,

repo_type="model"

)

api.upload_file(

path_or_fileobj=adapter_model_path,

path_in_repo="adapter_model.safetensors",

repo_id=repo_name,

repo_type="model"

)

api.upload_file(

path_or_fileobj="aicvd/training_args.bin",

path_in_repo="training_args.bin",

repo_id=repo_name,

repo_type="model"

)

Each upload_file call specifies:

path_or_fileobj: The local path to the file that needs to be uploaded.

path_in_repo: The path within the repository where the file should be stored, ensuring it aligns with expected directory structures for model deployment.

repo_id: The identifier for the repository, usually in the format “username/repository”.

repo_type: Specifies the type of repository, which is “model” in this case.

Uploading the files with direct API calls not only solved the immediate problem of missing configuration files but also reinforced the importance of a thorough deployment strategy. Ensuring that all components of your AI model are correctly uploaded and configured in Hugging Face Hub is crucial for enabling smooth, error-free deployment and usage across various platforms and applications. This method of file management via direct API calls is a valuable technique for anyone looking to deploy complex models reliably.
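The three upload_file calls above differ only in the file name, so they can be collapsed into a loop. This is a sketch of one way to do that, not what orpo_tune.py actually contains. The function name upload_adapter_files and the ADAPTER_FILES tuple are my own, and it assumes every file lives in the same local folder and is stored at the root of the repository, as in the calls above.

```python
from pathlib import Path

# The three files uploaded individually in the script.
ADAPTER_FILES = (
    "adapter_config.json",
    "adapter_model.safetensors",
    "training_args.bin",
)


def upload_adapter_files(api, repo_id, local_dir="aicvd", filenames=ADAPTER_FILES):
    """Upload each file from local_dir to the root of the model repository.

    `api` is expected to be an HfApi instance (or anything exposing the
    same upload_file keyword arguments).
    """
    uploaded = []
    for name in filenames:
        api.upload_file(
            path_or_fileobj=str(Path(local_dir) / name),
            path_in_repo=name,
            repo_id=repo_id,
            repo_type="model",
        )
        uploaded.append(name)
    return uploaded


# Usage, assuming `api = HfApi()` and `repo_name` from the script:
# upload_adapter_files(api, repo_name)
```

Passing the client in as an argument also makes the loop easy to try with a stand-in object before pushing anything to the Hub.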

Now if you check your HuggingFace account you will see your model:

The model page includes a README.md, known as the model card, which I suggest you complete with as much information as possible.

There is a Files and Versions tab – what is so neat is that it employs the Git system for version and source control. As we add parameters or make adjustments or re-train the model we can keep a full history and others can use and contribute to our model.

Now onto the second round of testing. In a completely new folder, I copied the query_model.py file and then used git lfs clone to pull my model from HuggingFace locally, so I could re-test and confirm that my uploaded model had all the working parts and that inference would work.

And the good news is that I got the exact same answers and quality responses from the query_model.py script from this fresh copy from the cloud.
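Given the earlier failure, it can be worth confirming that a fresh clone actually contains the adapter configuration before running inference against it. The sketch below is an illustration of that check rather than part of query_model.py. The function name is hypothetical, and the two keys it reads (peft_type and base_model_name_or_path) are ones I would expect to find in a PEFT adapter config, which is an assumption about your particular file.

```python
import json
from pathlib import Path


def summarize_adapter_config(model_dir):
    """Load adapter_config.json from a cloned model folder and summarize it."""
    config_path = Path(model_dir) / "adapter_config.json"
    if not config_path.is_file():
        raise FileNotFoundError(
            f"{config_path} not found; the adapter cannot be loaded without it"
        )
    with config_path.open(encoding="utf-8") as handle:
        config = json.load(handle)
    # .get() returns None for keys that are absent, so this does not fail
    # if the config uses different field names.
    return {key: config.get(key) for key in ("peft_type", "base_model_name_or_path")}


# Usage, assuming the model was cloned into a folder named "aicvd":
# print(summarize_adapter_config("aicvd"))
```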

Summary

In the not-too-distant future, imagine a world where the democratization of artificial intelligence has not only become a reality but a cornerstone of societal innovation. In this utopia, open-source models serve as the bedrock for endless creativity and specialized knowledge expansion across various domains. This vision, inspired by the practices detailed in a technical blog and fine-tuning exercises with Llama3 and other large language models, embodies a transformative shift in how we interact with, manipulate, and benefit from technology.

The implications for networking and technology are profound. With the ability to fine-tune open-source models on domain-specific data, individuals and organizations can enhance network management systems, optimize performance, and predict network failures before they occur. This capability extends to dynamically generating network configurations and security protocols, tailoring systems to be both robust against threats and efficient in performance.

In the realm of document management and office automation, imagine a world where AI models understand the context and nuances contained within PDFs, Word documents, spreadsheets, and HTML pages. Here, AI assists in real-time, offering suggestions, summarizing information, and even generating entire reports based on a dataset or a spreadsheet analysis. This would not only increase productivity but also elevate the quality of work by minimizing errors and standardizing formats across global teams.

The educational and informational realms, represented by platforms like YouTube and GitHub, stand to gain immensely. AI models, fine-tuned to understand and generate content based on specific subjects or programming languages, could offer personalized learning experiences and code suggestions. These models could automatically translate video content, generate accurate subtitles in multiple languages, or produce tailored tutorials based on a user’s learning pace and style.

In software development and IT operations, the integration of fine-tuned AI models with REST APIs promises enhanced automation and smarter systems. These models could dynamically interact with JSON outputs and inputs to not only detect and alert about anomalies but also suggest optimizations and improvements in real-time, thereby streamlining development workflows and boosting system reliability.

As we look to the future, it’s clear that the broad adoption of fine-tuning techniques, as discussed and demonstrated in our ongoing blog narrative, could lead to a world where AI is not a distant, monolithic figure but a customizable tool that is as varied and as accessible as the smartphones in our pockets. This world would be marked by a significant reduction in barriers to entry for AI enhancements, empowering even the smallest entities to leverage cutting-edge technology.

As we delve into the transformative potential of AI and its democratization, it’s vital to acknowledge the power of open-source resources in fostering innovation and learning. The blog you are reading is deeply inspired by the practical applications and experiments housed within the fine_tune_example repository on GitHub. This repository is not just a collection of code; it’s a launchpad for curiosity, experimentation, and personal advancement in the field of AI. By making the RAFT (Retrieval Augmented Fine-Tuning) code available, the repository aims to lower the barriers for enthusiasts and professionals alike, empowering them to adapt, enhance, and apply these models within their specific domains.

The GitHub repository serves as a foundational step for those interested in exploring how fine-tuning can be tailored to specific needs. It provides a transparent view into the process, allowing others to replicate, modify, and potentially improve upon the work. This open accessibility ensures that anyone, regardless of their geographical or professional background, can begin their journey in customizing AI to understand and interact with domain-specific data more effectively. It’s about turning open-source code into a personal toolkit for innovation.

Furthermore, the spirit of sharing and community continues through platforms like Hugging Face, where the fine-tuned models are hosted. This not only makes state-of-the-art AI more accessible but also invites collaborative enhancement and feedback. The linked YouTube channel extends this educational outreach, providing visual guides and tutorials that demystify the process and encourage practical engagement. Each video, blog post, and repository update is a stepping stone towards building a community of knowledgeable and empowered AI users and developers.

These resources collectively forge a path toward a future where AI technology is not just a tool for the few but a widespread asset available to many. They inspire us to learn, innovate, and contribute back to the community, ensuring that the journey towards an AI-augmented future is taken together. Let’s embrace these opportunities to expand our horizons, enhance our capabilities, and drive forward with the collective wisdom of a community at our backs.

In conclusion, the future where every individual and organization can mold their own AI models to fit their specific needs is not just feasible but is on the horizon. This democratization will foster a new era of innovation and efficiency, characterized by unprecedented personalization and interaction with technology. The implications extend beyond mere convenience, suggesting a profound impact on how we work, learn, and interact with the digital world. This vision, grounded in today’s advancements and explorations, sets the stage for a more inclusive, intelligent, and interconnected global community.

John Capobianco

May 11, 2024

DevNet Snack Minutes Episode #63!

I returned to The Art of Network Engineering Podcast!

Check it out!

mind_nmap (this link opens in a new window) by automateyournetwork (this link opens in a new window)

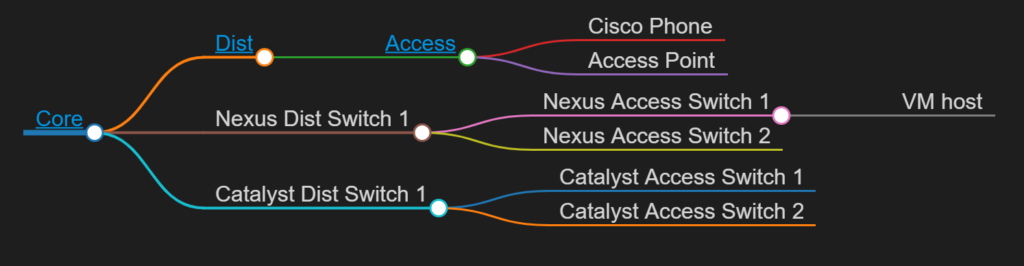

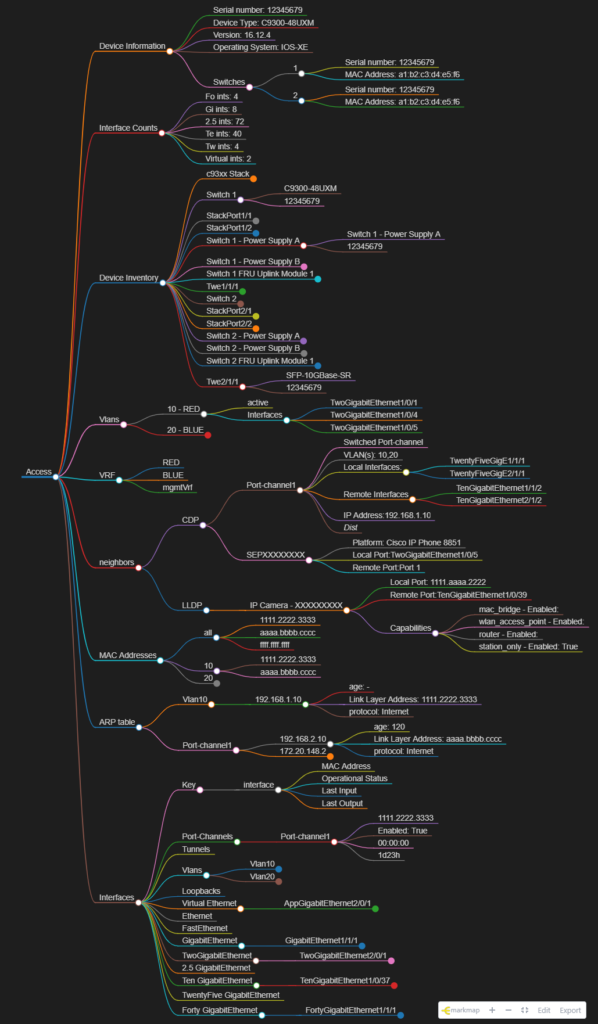

An automated network Mind Map utility build from markmap.js.org