This was the most fun I’ve ever had on a podcast – I hope you enjoy it as much as I did!

Shut / No Shut – With the Click of a Button

I won!

Thank you to Cisco DevNet and everybody in my community who has supported me over the years!

Automate Your Network – The Educative.io Online Course!

I am so very happy to announce that, after 8 months of development, Automate Your Network is now available on educative.io as an online course (early access)!

Automate Your Network – Learn Interactively (educative.io)

My first Packet Pushers Blog!

Check it out!

Infrastructure as Software – Applying A Software Design Pattern to Network Automation!

Applying A Software Design Pattern To Network Automation – Packet Pushers

Tonight – Live on YouTube with Du’An “Lab Every Day” Lightfoot!

Clippy 3D!

Show IP Interface Brief – Reimagining in 3D

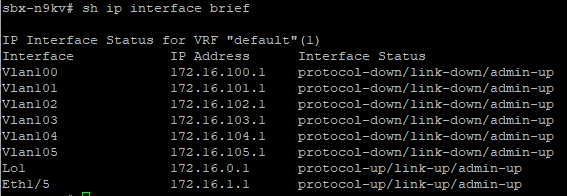

Remember this CLI output?

Using real network data, I’ve reimagined the Show IP Interface Brief command as an Interactive 3D World!

Complete with indicators that make it easy to see whether an interface is healthy or not!

https://www.automateyournetwork.ca/wp-content/uploads/verge3d/1170/IP_Interface_Brief.html

Merlin 3D: Cisco Product Security Incident Response Team (PSIRT) 3D Report!

I have been very quiet on this blog lately (apologies!); the best way to follow my development work now is likely Twitter or YouTube.

However! I have been using Blender to make 3D Animations from Network State Data.

I’ve recently figured out how to make these animations web-ready!

Check it out! This is the PSIRT Report for the Cisco DevNet Sandbox Nexus 9k as a 3D Blender animation.

Click here for the full page version – Verge3D Web Interactive (automateyournetwork.ca)

Much more to come!

Merlin Feature: WebEx #chatbots with pyATS

With all the big WebEx news – including a new logo – I wanted to revisit the basic #chatbots I have working using pyATS, Python requests, and the WebEx API after the conversation came up in the #pyATS WebEx Community space today:

First, let’s take a look at what this does. This is not limited to Merlin; any pyATS job has this capability.

If you create the pyats.conf file as Takashi suggests and add the [webex] section, the pyATS job will report its job summary into the WebEx space you specify in the config file.
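If you would rather script that [webex] section than type it by hand, here is a minimal sketch using Python’s configparser. The key names token and space are an assumption based on my reading of the pyATS WebEx notification docs, and the values you pass in are placeholders; check both against your pyATS version.

```python
import configparser


def write_pyats_conf(path, token, space):
    """Create or update the [webex] section of a pyats.conf file.

    Assumption: pyATS reads the keys 'token' and 'space' from [webex];
    confirm the exact key names in the pyATS docs for your version.
    """
    # interpolation=None so characters such as '%' in a token are stored literally
    config = configparser.ConfigParser(interpolation=None)
    config.read(path)  # a missing file is silently ignored
    if not config.has_section("webex"):
        config.add_section("webex")
    config.set("webex", "token", token)
    config.set("webex", "space", space)
    with open(path, "w") as handle:
        config.write(handle)
```

For example, write_pyats_conf("pyats.conf", "<BEARER_TOKEN>", "<ROOM_ID>") writes the file in the current directory; move it to wherever your pyATS installation picks up pyats.conf.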

Inside WebEx, the job summary looks something like this:

This in itself is pretty handy! All you need to do is go to the Cisco WebEx for Developers portal and either create a Bot under My Apps or, right from the browser, grab one of the 12-hour tokens.

The easiest way to get one of these tokens:

- Go to the Documentation

- Find the API Reference

- Find Messages

- Pick POST

- COPY THIS BEARER TOKEN

- Paste that into your pyats.conf

But how do I get the Room / Channel / Space ID?

If you browse to Rooms, you can GET your current Room list.

This returns the list of rooms as JSON; here is the Merlin Room ID:
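If you would rather pull the Room ID from a script than from the browser, here is a small sketch against the WebEx REST API. It assumes the https://webexapis.com/v1/rooms endpoint and that the response wraps the rooms in an items list whose entries carry id and title fields. It uses the standard library’s urllib so it runs without extra installs; the function names are hypothetical.

```python
import json
import urllib.request

ROOMS_URL = "https://webexapis.com/v1/rooms"


def fetch_rooms(token):
    """GET /v1/rooms with a bearer token and return the decoded JSON."""
    request = urllib.request.Request(
        ROOMS_URL, headers={"Authorization": f"Bearer {token}"}, method="GET"
    )
    with urllib.request.urlopen(request) as response:
        return json.load(response)


def find_room_id(rooms, title):
    """Return the 'id' of the first room in rooms['items'] whose title matches, else None."""
    for room in rooms.get("items", []):
        if room.get("title") == title:
            return room.get("id")
    return None
```

Usage: room_id = find_room_id(fetch_rooms(token), "Merlin"), where the room title is a hypothetical example. Only fetch_rooms touches the network, so find_room_id can be checked offline.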

That’s it! You are ready to connect your pyATS jobs and receive the job summary as a WebEx message!

Adding Network State Data

Building on this foundational pyATS integration, and on how simple WebEx is to work with, I thought I would add a few sample commands to a Merlin pyATS job so the community can see how to send network state data to WebEx!

I want the message to be in Markdown, so I am going to use a Jinja2 template to craft the JSON body we POST with Python requests after pyATS has parsed or learned the function.
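The post renders the JSON with Jinja2 and sends it with requests. As an alternative sketch, you can build the payload as a Python dict and let json.dumps handle the quoting and escaping. This version swaps in the standard library’s urllib for requests so it runs without extra installs. The endpoint https://webexapis.com/v1/messages and the roomId and markdown fields follow the WebEx Messages API as I understand it; the function names are hypothetical.

```python
import json
import urllib.request

MESSAGES_URL = "https://webexapis.com/v1/messages"


def build_message_request(token, room_id, markdown):
    """Build, but do not send, a POST /v1/messages request carrying Markdown.

    json.dumps takes care of quoting and escaping, so the rendered Markdown
    itself never has to be valid JSON.
    """
    body = json.dumps({"roomId": room_id, "markdown": markdown}).encode("utf-8")
    return urllib.request.Request(
        MESSAGES_URL,
        data=body,
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send(request):
    """Send a prepared request and return WebEx's JSON reply."""
    with urllib.request.urlopen(request) as response:
        return json.load(response)
```

With requests installed, the equivalent call is requests.post(MESSAGES_URL, json={"roomId": room_id, "markdown": markdown}, headers={"Authorization": f"Bearer {token}"}).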

We don’t need a lot to make this happen, either; here is everything I import:

- Update: I’ve since discovered we need one more import, plus pip install requests_toolbelt, in order to attach files to WebEx messages.

We set up our WebEx room and token (12-hour or bot) as variables we can call later.
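One way to set those variables without hard-coding secrets in the job file is sketched below. The environment variable names WEBEX_TOKEN and WEBEX_ROOM_ID are hypothetical, and falling back to the [webex] section of pyats.conf assumes the token and space key names; adjust both to your own setup.

```python
import configparser
import os


def load_webex_settings(conf_path="pyats.conf"):
    """Return (token, room_id) for the WebEx API.

    Hypothetical environment variables WEBEX_TOKEN and WEBEX_ROOM_ID take
    precedence; otherwise the [webex] section of pyats.conf is used
    (assumed keys: 'token' and 'space').
    """
    config = configparser.ConfigParser(interpolation=None)
    config.read(conf_path)  # a missing file simply leaves the config empty
    token = os.environ.get("WEBEX_TOKEN") or config.get("webex", "token", fallback=None)
    room_id = os.environ.get("WEBEX_ROOM_ID") or config.get("webex", "space", fallback=None)
    if not token or not room_id:
        raise RuntimeError("Both a WebEx token and a room ID must be configured")
    return token, room_id
```

Keeping the 12-hour token outside the code also makes it easy to swap in a fresh one each day, or a bot token later.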

The general_functionalities import is important: it is object-oriented code that gets reused for every pyATS learn or parse call.

For this example I will run two learn functions, platform and routing, and see if I can transform real network state data into meaningful WebEx messages.

I tell Python where to find the Jinja2 templates and set up a variable I can use later to load those templates.

We then set up our pyATS framework and connect (testbed.connect) to our topology.

Again, the testbed file looks like this:

Now that we are connected, we can begin our Test Steps, ultimately looping (for) over each device in our topology (testbed).

Yes, in this Sandbox there is only one device, but this scales to any number of devices; just add them to the testbed.
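The connect-and-loop pattern can be sketched roughly as follows. It assumes Genie’s device.learn(feature) returns an Ops object with a to_dict() method, and that genie.testbed.load and testbed.connect() are available in your pyATS install; learn_features and the testbed.yaml file name are hypothetical. The Genie import is deferred into main() so the loop itself can be exercised with stand-in objects.

```python
def learn_features(testbed, features=("platform", "routing")):
    """Return {device_name: {feature: learned_dict}} for every device in the testbed.

    Assumes each device offers Genie's learn(feature) and that the returned
    Ops object has a to_dict() method.
    """
    learned = {}
    for name, device in testbed.devices.items():
        learned[name] = {feature: device.learn(feature).to_dict() for feature in features}
    return learned


def main(testbed_file="testbed.yaml"):
    """Load the testbed, connect to every device, and learn the features."""
    # Deferred import: only needed when talking to real devices.
    from genie.testbed import load

    testbed = load(testbed_file)
    testbed.connect()
    return learn_features(testbed)
```

Adding devices to the testbed file is all it takes for the same loop to cover them.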

Now we can learn platform

At this point we have the following JavaScript Object Notation (JSON) data inside the self.learned_platform variable:

Our goals:

- Send a log of our pyATS Merlin job to a WebEx Room or Individual

- Send this data as a human friendly message

- Create a CSV spreadsheet we can attach to our message

Now we start our test steps

We will get a boolean pass/fail from the “Create CSV and Send to WebEx” step.

Next I set up a few variables: the Jinja2 references, the directory for the CSV file, and the file name.

For attachments we also declare another variable, a MultipartEncoder holding the information required to attach the Learned_Platform.csv file.
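A sketch of the attachment step, assuming the requests and requests_toolbelt packages and the (filename, data, content_type) tuple form that MultipartEncoder accepts for a file field. The helper names are hypothetical. The third-party imports sit inside post_with_attachment so the field-building helper can be used and tested without those packages.

```python
import os

MESSAGES_URL = "https://webexapis.com/v1/messages"


def build_attachment_fields(room_id, text, csv_path):
    """Form fields for a WebEx message with one CSV file attached.

    'files' uses the (filename, data, content_type) tuple form that
    requests_toolbelt's MultipartEncoder accepts.
    """
    with open(csv_path, "rb") as handle:
        data = handle.read()
    return {
        "roomId": room_id,
        "text": text,
        "files": (os.path.basename(csv_path), data, "text/csv"),
    }


def post_with_attachment(token, room_id, text, csv_path):
    """POST the message plus attachment; needs: pip install requests requests_toolbelt."""
    # Imported here so build_attachment_fields works without these packages.
    import requests
    from requests_toolbelt.multipart.encoder import MultipartEncoder

    encoder = MultipartEncoder(fields=build_attachment_fields(room_id, text, csv_path))
    response = requests.post(
        MESSAGES_URL,
        data=encoder,
        headers={"Authorization": f"Bearer {token}", "Content-Type": encoder.content_type},
    )
    response.raise_for_status()
    return response.json()
```

The Content-Type header must come from encoder.content_type, because it carries the multipart boundary the encoder generated.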

Next we render the .csv file from the Jinja2 template.

Which looks like this:

That renders a file that looks like this:
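The post renders this file with Jinja2. As a swapped-in alternative, the standard library’s csv module can produce the same kind of header-row plus value-row file and handles quoting of commas for you. This sketch assumes self.learned_platform is a dict and keeps only its top-level scalar values; nested sections are skipped, and the function name is hypothetical.

```python
import csv
import io


def platform_to_csv(learned_platform):
    """Render the top-level scalar fields of a learned platform dict as CSV text.

    Nested dicts and lists (for example per-slot details) are skipped in
    this sketch.
    """
    scalars = {
        key: value
        for key, value in learned_platform.items()
        if not isinstance(value, (dict, list))
    }
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(list(scalars))           # header row: field names
    writer.writerow(list(scalars.values()))  # single data row: values
    return buffer.getvalue()
```

Usage: with open("Learned_Platform.csv", "w", newline="") as handle: handle.write(platform_to_csv(self.learned_platform)).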

We will use two more Jinja2 templates for the actual message we send. Because the JSON body we POST to WebEx is a single line, and in Markdown a header starts with a # symbol, the entire message would render as a header; to avoid that, we send the header as its own message first.

Here is the line in Python

And the matching Jinja2 template

Remember, we are sending one long single-line string as Markdown, so if we want multiple lines we need to insert <br/> line-break tags.
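The header-first approach and the <br/> joins described above can be sketched together in a few lines. The function name and the choice to drop blank lines are illustrative assumptions, not the Merlin code.

```python
def build_markdown_messages(title, lines):
    """Split a report into two single-line Markdown messages: header first, then body.

    The header is sent on its own because a single-line Markdown string that
    starts with '#' would render entirely as a heading. Body lines are joined
    with <br/> so they display on separate lines; blank lines are dropped.
    """
    header = f"# {title}"
    body = "<br/>".join(line.strip() for line in lines if line.strip())
    return [header, body]
```

Each string in the returned list is then posted, in order, as its own WebEx message.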

Here is how we send the header

Which looks like this in WebEx:

Now let’s go ahead and template the Markdown

Which looks like:

Important! I had to “trim” this down from the “full” Markdown because there *is* a message length limit, so watch for that!

That is also why we attach the full CSV.
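A minimal guard against that limit: trim the Markdown to a byte budget and point the reader at the attachment. The 7439-byte figure is my understanding of the WebEx Messages API limit; treat it as an assumption and check the current docs. Trimming works on UTF-8 bytes so multi-byte characters cannot push the message over the budget.

```python
# Assumption: the WebEx Messages API caps a message at 7439 bytes.
MAX_MESSAGE_BYTES = 7439


def trim_message(text, limit=MAX_MESSAGE_BYTES, suffix="<br/>... (trimmed, see attached CSV)"):
    """Return text unchanged if it fits in limit UTF-8 bytes; otherwise trim it and append suffix."""
    encoded = text.encode("utf-8")
    if len(encoded) <= limit:
        return text
    budget = max(limit - len(suffix.encode("utf-8")), 0)
    # errors="ignore" drops a multi-byte character that the cut split in half
    return encoded[:budget].decode("utf-8", errors="ignore") + suffix
```

Run the rendered Markdown body through trim_message just before posting, and the full detail still travels in the attached CSV.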

So go get #chatbotting using real network state data!

Reach out if you hit any snags and watch for the full development video!