I’ve been on a roughly three-year journey with network automation, and while I have had great personal and technical success, my organization and most people outside my immediate day-to-day circle are still shackled to the ‘old’ way of doing things (primarily the CLI).

This year I decided to start a new program – a training session for those outside of my small development team – primarily targeted at the “operations” staff who can benefit the most from automation and infrastructure as code. This includes network operators, monitoring / NOC team members, IT Security staff, other developers, compute (server / storage) teams; everybody is welcome. We divided the calendar up into different teams with different recurring timeslots.

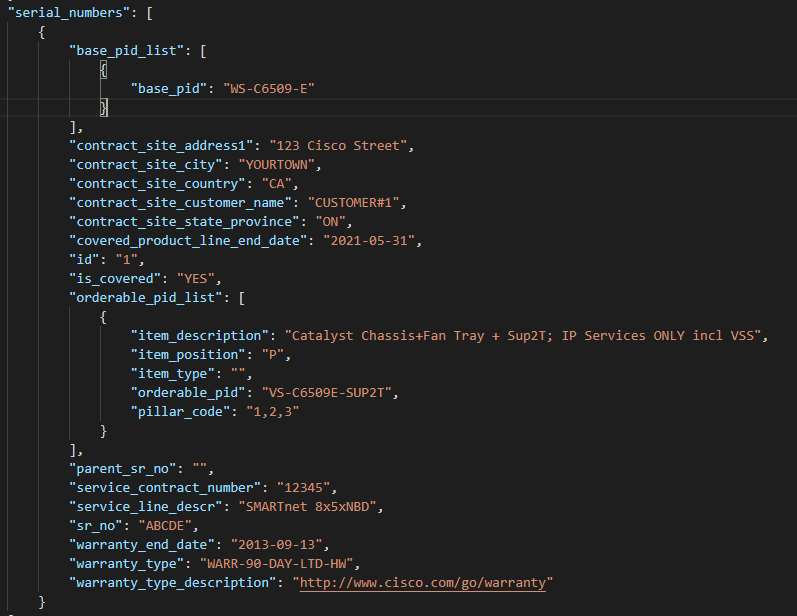

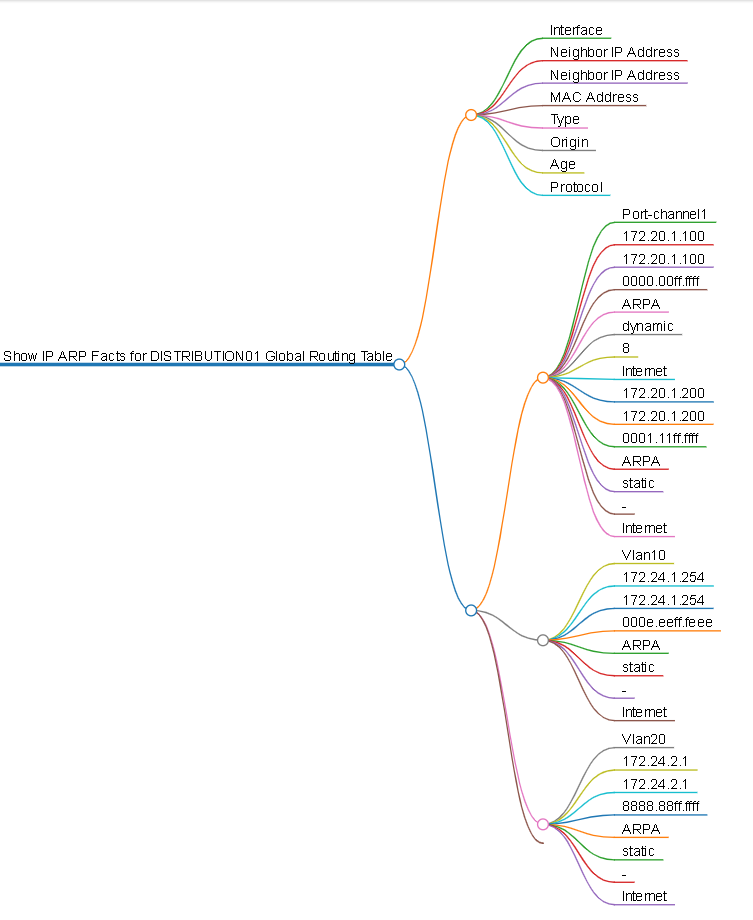

In advance I had written and tested a bunch of code for the Campus Core, using Ansible facts and Genie-parsed show commands, that transforms the output into business-ready documentation. My plan was simple enough:

1. Ensure everybody was on the same page and had the same toolkit

* VS Code

* Git

* Azure DevOps repository bookmarked

* Various VS Code extensions

* A basic overview of main vs working branches in Git

* A basic outline on Ansible, YAML, Jinja2, and JSON

2. An operator would create a working development branch. In our case that was the Distribution Layer, so the Git branch was named dist_facts.

* This operator ‘drives’ the whole session sharing their screen

* Step by step, line by line, refactoring (fancy way of saying copy-pasting) working code from the Core and updating it for the Distribution Layer as necessary

* Git clone, add, commit, push, and pull performed in both VS Code and Linux CLI

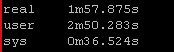

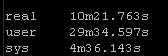

* Ansible playbook executed with --limit against one building, then at scale after validating output

* Thorough tour of the JSON, YAML, CSV, MD, and HTML files after each run

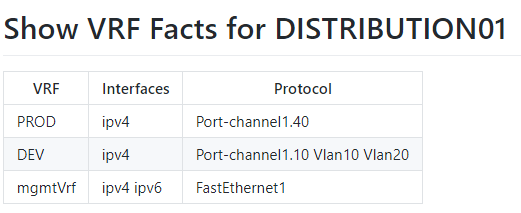

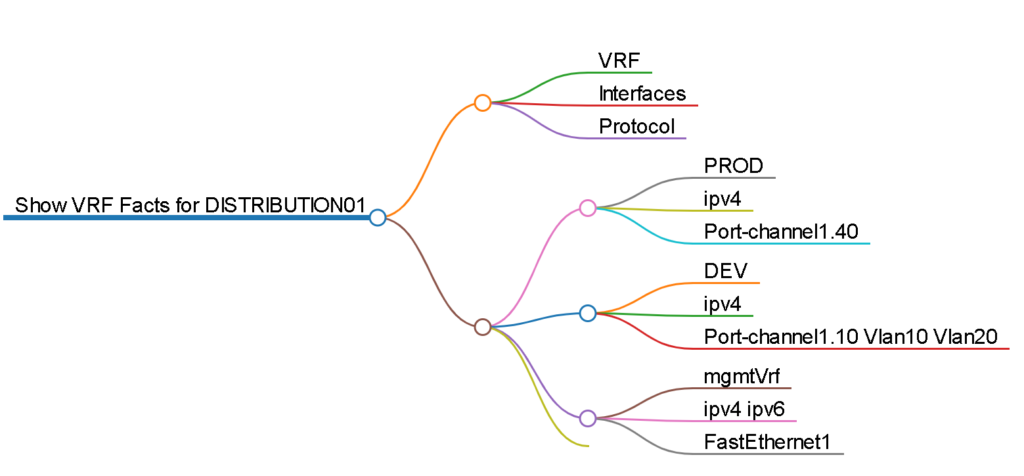

3. Work through Ansible Facts and various Genie parsed commands to build up a source of truth

So far it has been a very successful approach with the two teams adopting Marvel superhero teams (TEAM: CAPTAIN AMERICA and TEAM: IRON MAN respectively) allowing me to create memes like this:

Anyway – back to the point – today we had the following exchange:

Me: “So – we just parsed the show etherchannel summary CLI command and transformed the output into CSV files – amazing right! Any questions?”

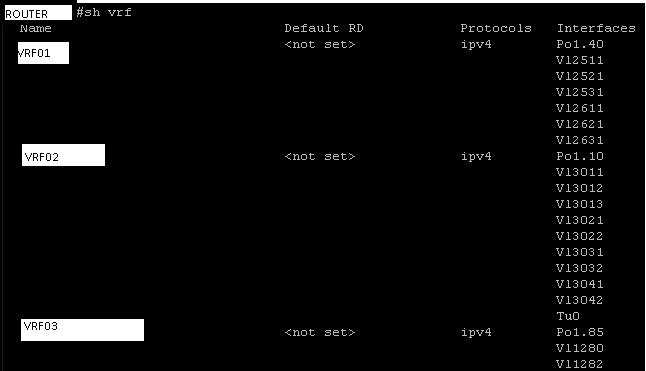

Operator catching on quickly: “We use the show interfaces trunk command often to track down what VLANs are being carried on which interfaces – can we transform that into a CSV?”

Me, excited and proud of the Operator: “Amazing question, and I’m glad you brought up a practical example of a command you use in the field all the time that we can maybe transform into something a little more useful than CLI output!

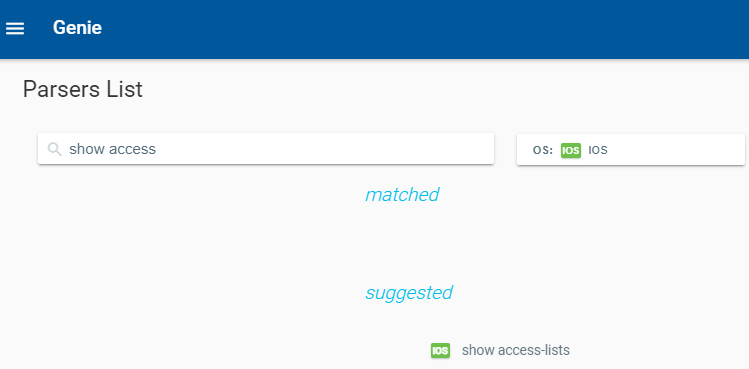

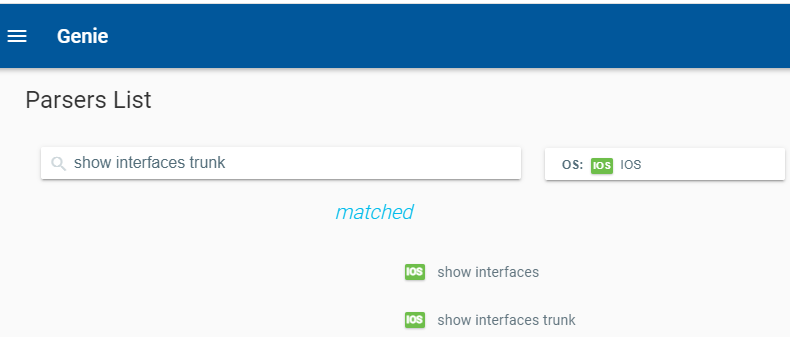

Launch the Genie parser search engine (under available parsers on the left menu) and let’s see if there is a parser available.

Bingo! Let’s do it!”

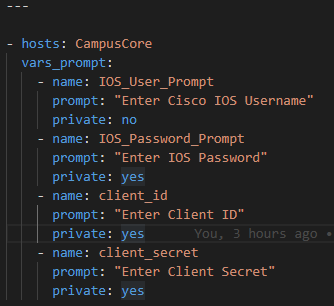

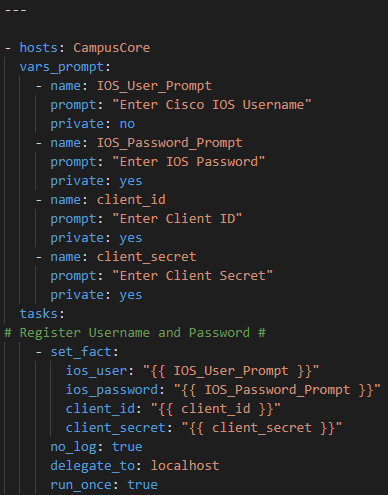

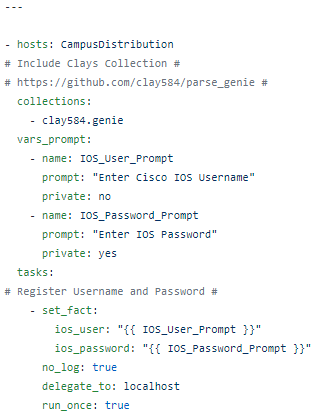

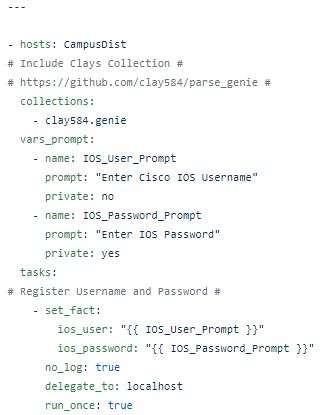

The playbook

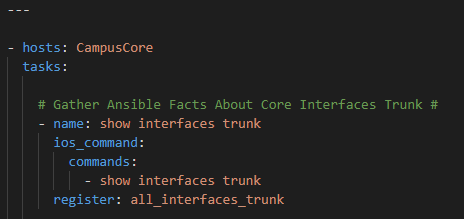

In this example we are targeting the Campus Core.

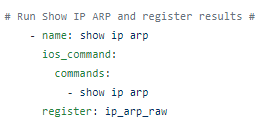

The playbook is simple enough

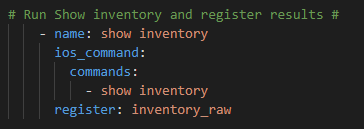

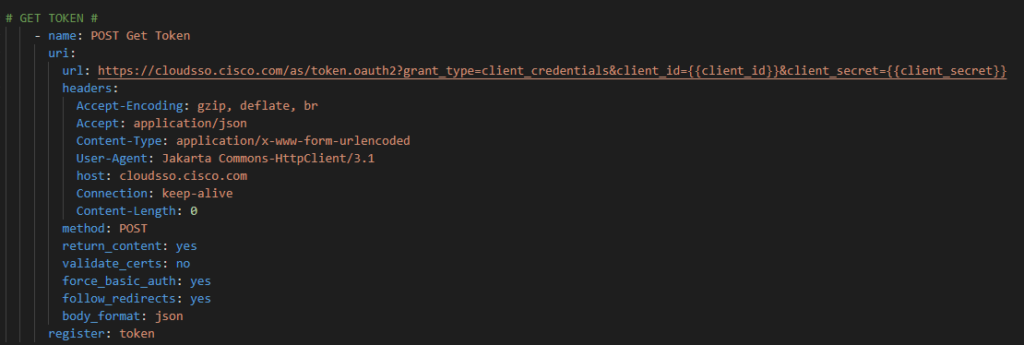

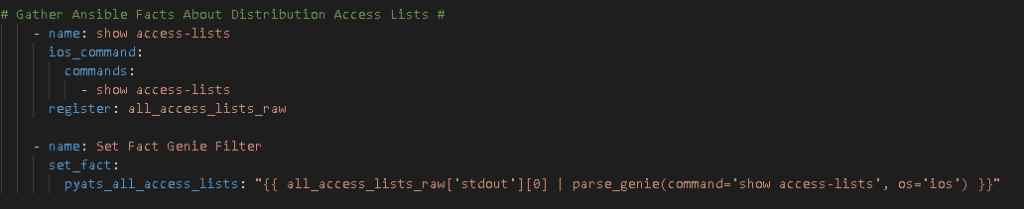

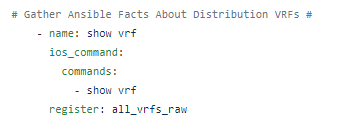

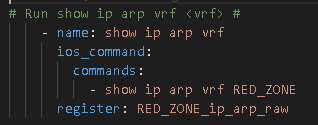

Use the Ansible ios_command module to issue and register the show interfaces trunk command

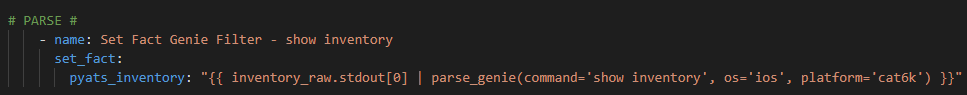

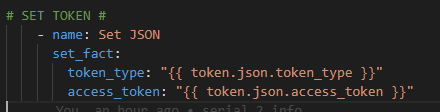

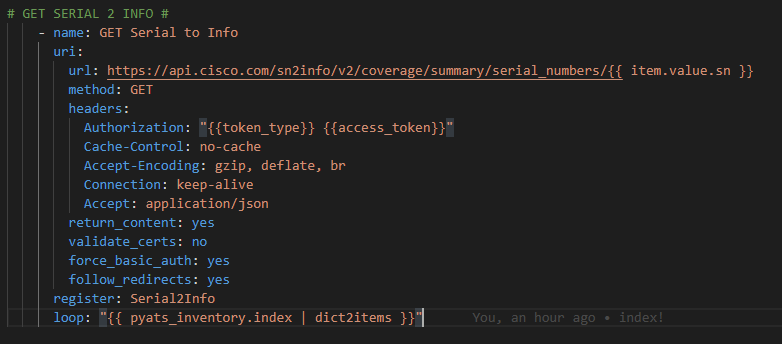

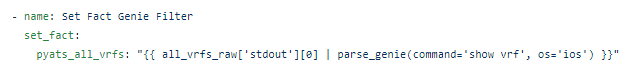

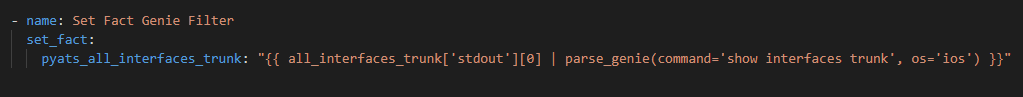

Next, use the Genie filter plugin to filter the raw variable and register a new variable with the Genie-parsed results.

Note that you need to pass the parse_genie filter two arguments: the command itself and the appropriate Cisco operating system.

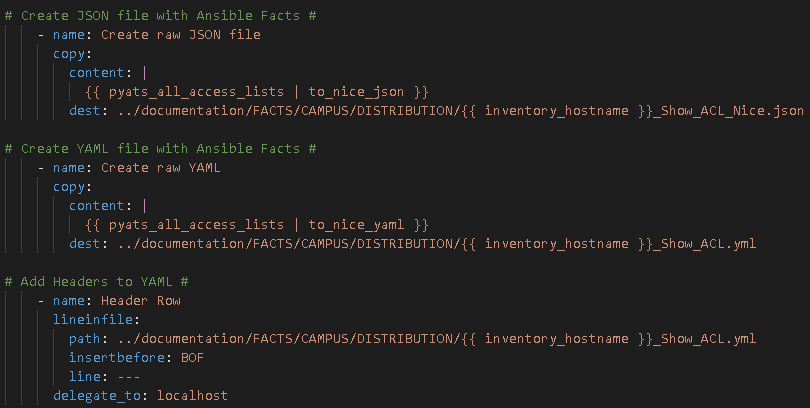

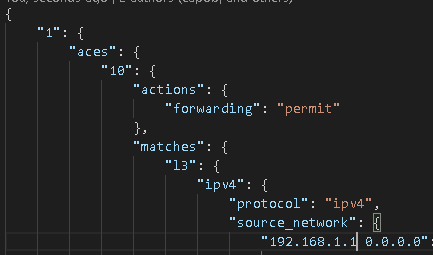

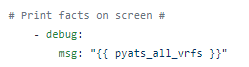

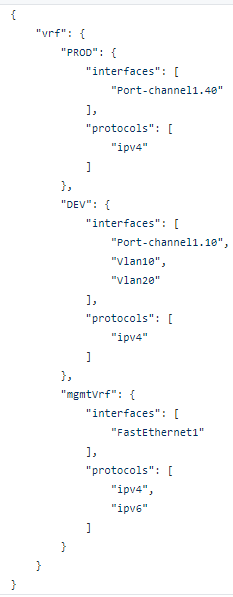

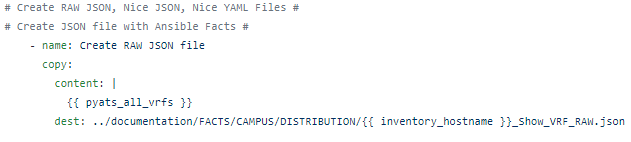

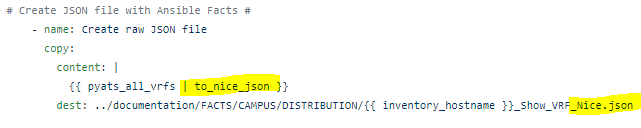

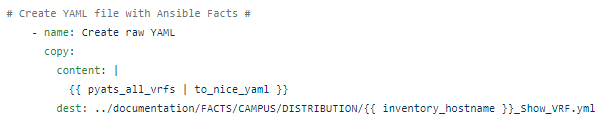

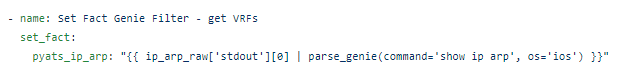

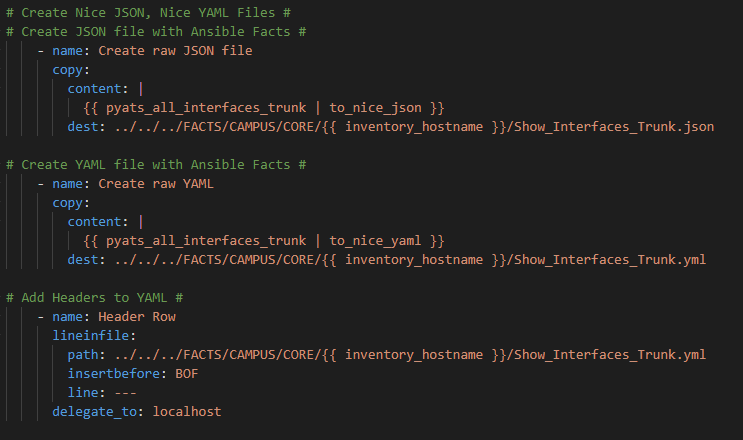

Next I like to create a Nice JSON and Nice YAML file with the parsed results as follows:

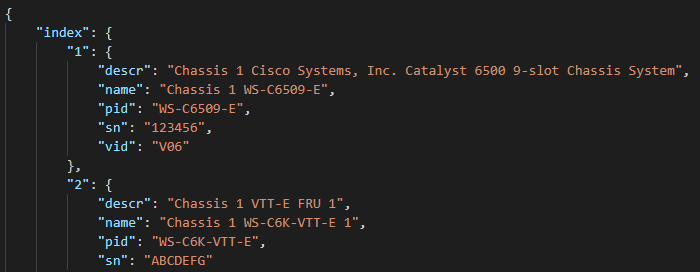

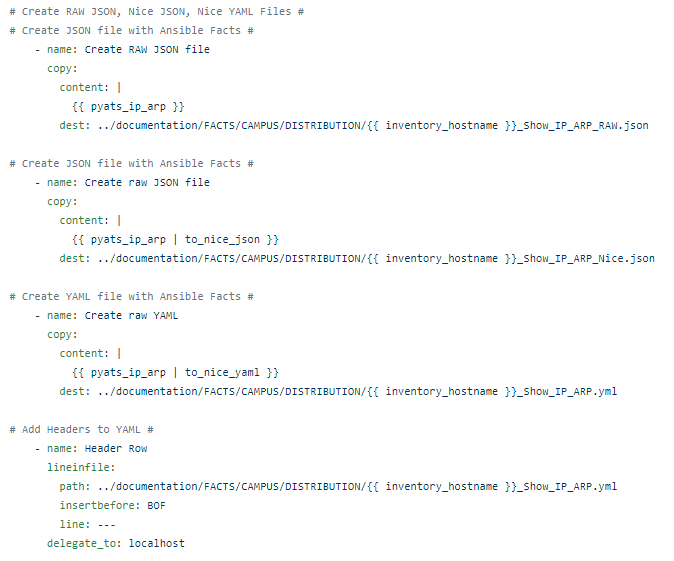

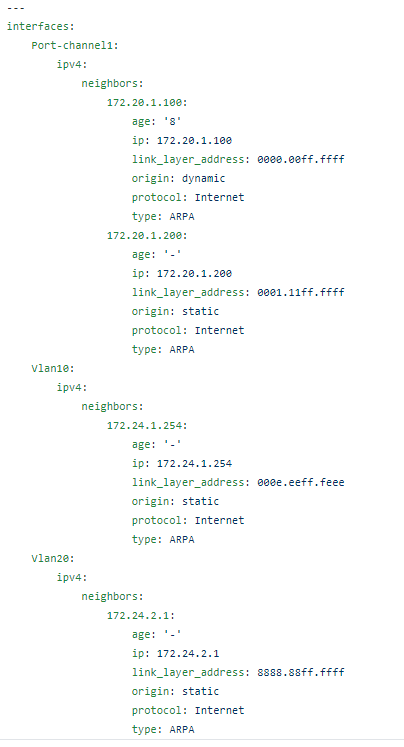

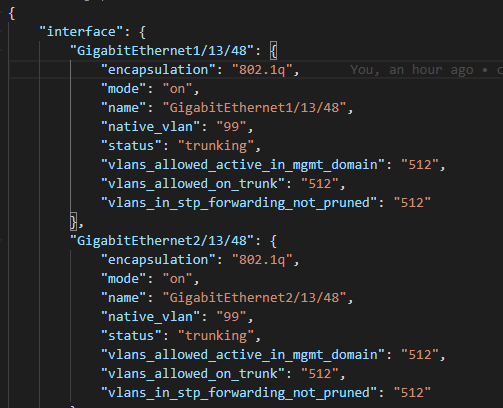

Which look like this:

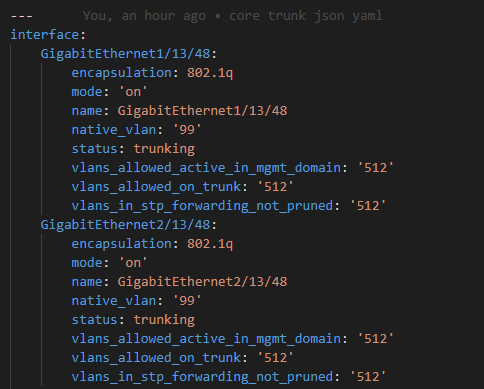

And this:

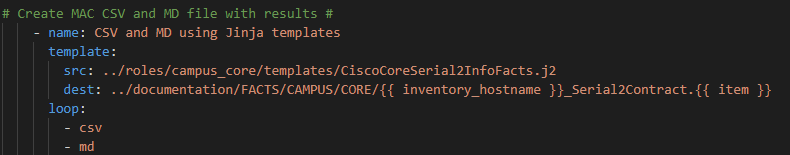

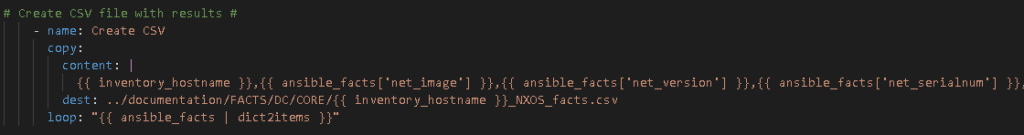

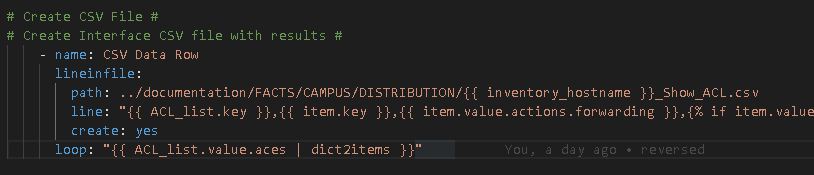

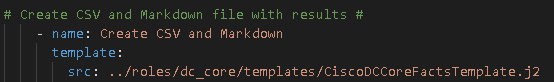

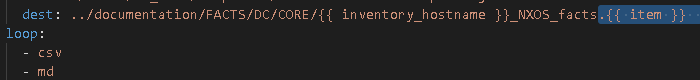

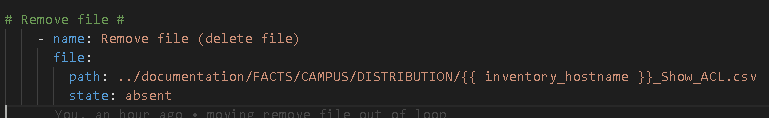

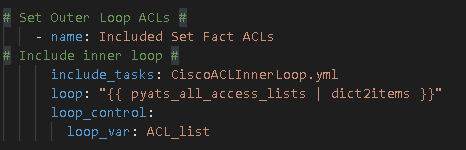

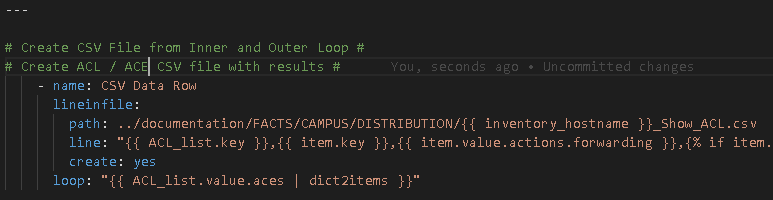

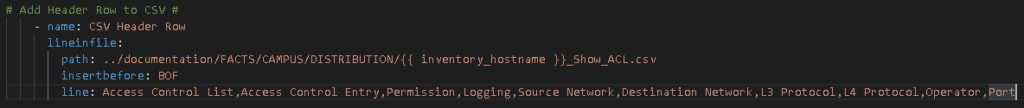

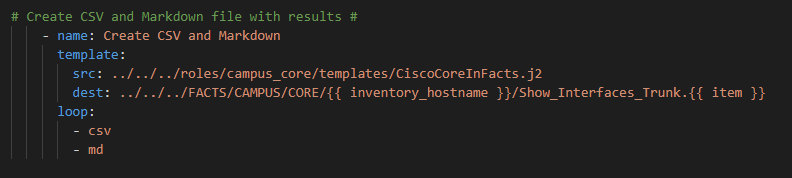
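For readers who want to see the idea behind the "nice" output outside of Ansible, here is a minimal Python sketch of pretty-printing a parsed result as JSON. It assumes the playbook relies on Ansible's to_nice_json filter, which behaves roughly like the call below. The `parsed` dictionary is a hypothetical sample, and its key names are assumptions about the shape of the Genie output, so compare them with your own parsed results. YAML is left out because it needs a third-party library.

```python
import json

# Hypothetical sample shaped like a Genie-parsed "show interfaces trunk" result.
# The key names are assumptions for illustration only.
parsed = {
    "interface": {
        "Port-channel1": {
            "name": "Port-channel1",
            "mode": "on",
            "encapsulation": "802.1q",
            "status": "trunking",
            "native_vlan": "1",
            "vlans_allowed_on_trunk": "10,20,30-40",
        }
    }
}


def to_nice_json(data):
    """Pretty-print data with sorted keys and 4-space indentation."""
    return json.dumps(data, indent=4, sort_keys=True)


nice = to_nice_json(parsed)
print(nice)
```

Sorted keys and fixed indentation keep the file stable between runs, which makes the Git diffs after each playbook run much easier to read.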

I then use a loop to create a CSV and MD file from a Jinja2 template

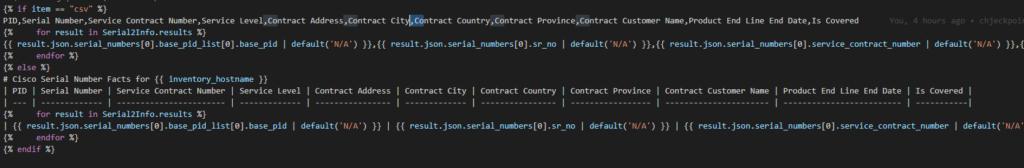

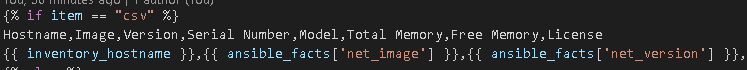

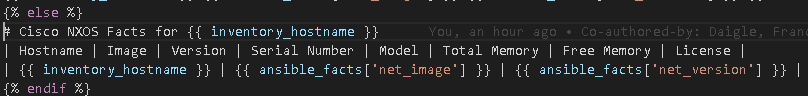

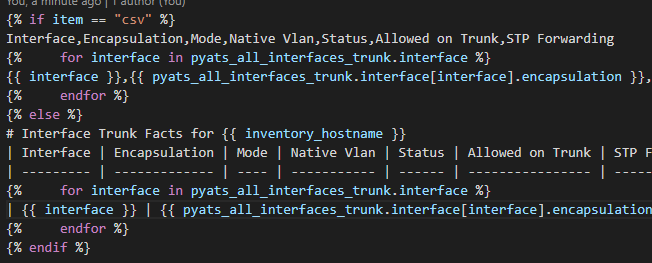

The Jinja2 Template looks like this

A couple of things to highlight:

* We check the current item of the Ansible loop: when it is “csv” we template a CSV file; otherwise (else) it is “md” and we template a Markdown file

* The For Loop is over each interface in the results

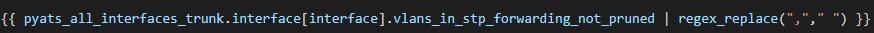

* Be careful with the CSV file: you need to regex_replace the commas out of the results, because some values are themselves comma-separated, which would throw off the columns in your CSV file. The Markdown does not require any regex.
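The points above can also be sketched in plain Python, which may help anyone who wants to reason about the template logic before writing Jinja2. This is not the template from the playbook, only an illustration of the same three ideas: branch on the loop item ("csv" or "md"), loop over each interface, and strip commas only for the CSV. The sample data, the field names in `FIELDS`, and the choice of a space as the replacement character are all assumptions.

```python
# Hypothetical sample shaped like a Genie-parsed "show interfaces trunk" result.
# Key names and values are assumptions for illustration only.
parsed = {
    "interface": {
        "Port-channel1": {
            "mode": "on",
            "encapsulation": "802.1q",
            "status": "trunking",
            "native_vlan": "1",
            "vlans_allowed_on_trunk": "10,20,30-40",
        },
        "GigabitEthernet1/0/1": {
            "mode": "on",
            "encapsulation": "802.1q",
            "status": "trunking",
            "native_vlan": "1",
            "vlans_allowed_on_trunk": "1-4094",
        },
    }
}

# Columns to report for every interface (assumed field names).
FIELDS = ["mode", "encapsulation", "status", "native_vlan", "vlans_allowed_on_trunk"]


def render(data, fmt):
    """Render one row per interface as CSV ("csv") or a Markdown table (anything else)."""
    header = ["interface"] + FIELDS
    lines = []
    if fmt == "csv":
        lines.append(",".join(header))
    else:
        lines.append("| " + " | ".join(header) + " |")
        lines.append("| " + " | ".join("---" for _ in header) + " |")
    for name, details in data["interface"].items():
        values = [name] + [str(details.get(field, "")) for field in FIELDS]
        if fmt == "csv":
            # Embedded commas (VLAN lists) would create extra columns, so replace them.
            values = [value.replace(",", " ") for value in values]
            lines.append(",".join(values))
        else:
            lines.append("| " + " | ".join(values) + " |")
    return "\n".join(lines) + "\n"


# Mirror the Ansible loop over the two output formats.
outputs = {fmt: render(parsed, fmt) for fmt in ("csv", "md")}
for fmt, text in outputs.items():
    print(f"--- {fmt} ---")
    print(text)
```

Replacing the commas mirrors the regex_replace approach described above. In Python you could instead let the standard csv module quote those values, which would preserve the original VLAN list text.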

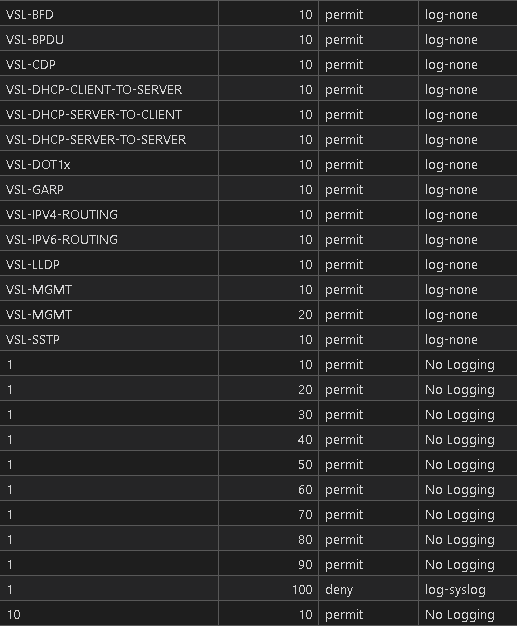

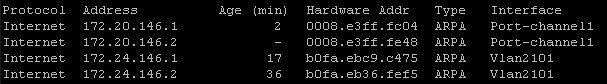

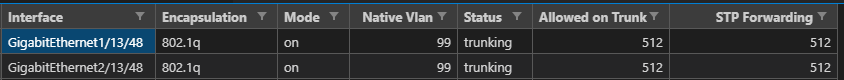

Which results in this amazing sortable, searchable, filterable, version-controlled, source-controlled, truthful, fact-based CSV file:

This example covers only the single logical Core, but we will quickly refactor the code in our next session together next week, and the operator who wanted this playbook will get a chance to write it! Then we will have the interface trunk information for the entire campus automatically in spreadsheets!

The moral of this story is to collaborate. Ask your front-line operators how automation can help them. Do they have any frequently used or highly valuable CLI commands they want transformed into CSV format? Would Ansible facts help them? Then show them how to do it so they can start writing these playbooks for themselves.